Potential MSc topics

- Integration of 3DCityDB + Energy ADE 2.0 into a Solar Potential Analysis Engine

- Too cool or too hot? Cooling and heating demand scenarios based on the semantic 3D city model of Rotterdam

- IFC in PostgreSQL/PostGIS

- Creation of planar partitions from mismatched datasets

- Different heuristics for CGAL polygon repair

- Urban Mesh Segmentation

- 3D Reconstruction for Man-Made Urban Linear Objects

- Citizen Voices in Climate Action: Developing digital platforms for citizen engagement in climate planning and design

- Revealing energy inequalities in The Netherlands

- Developing an open-source GIS pipeline tailored for FastEddy

- Water (level) detection with ICESat-2 measurements

- Filling the massive gaps in space lidar datasets with a diffusion model

- Urban microclimate simulations using vegetation

- Urban microclimate simulations @ TU Delft

- Automatic creation of detailed building energy models from 3DBAG

- Developing an open-source Multifunctional Green Infrastructure Planning Support System

- Development of the client-side part of the 3DCityDB-Tools plugin for QGIS to support CityGML 3.0 data

- Adding support for CitySim to the 3DCityDB-Tools plugin for QGIS

- Accuracy assessment of EnergyBAG (in-house urban building energy simulation tool)

- Development of a Graphical User Interface for EnergyBAG (in-house urban building energy simulation tool)

- The effects of building model automatic reconstruction methods for CFD simulations

- Development of quality assessment methods for point cloud datasets

- Using urban morphology to optimize biking and running routes in cities?

- Predicting pedestrian wind comfort and thermal comfort with Large-Eddy Simulations in uDALES

- Optimizing building mesh designs for computational fluid dynamics using machine learning

- To mesh or not to mesh: immersed boundary methods and porosity in OpenFOAM

- Semantically enriching the 3D BAG

- 3D Cadastre

- Performance and robustness of software libraries for computational geometry

- Something with streaming TINs for massive datasets

- Developing methods for edge-matching with customisable heuristics (geometric, topological and semantic)

- Modern metadata for CityJSON

- Supporting earthquake risk assessment by 3D city models

- Reconstructing 3D apartment units from legal apartment drawings

- 3D delineation of urban river spaces

- Estimating noise pollution with machine learning?

- How can 3D alpha wrapping be best used to repair buildings?

- Automatic generation of digital twins for heritage buildings

- Urban building daylight modeling – improving city models

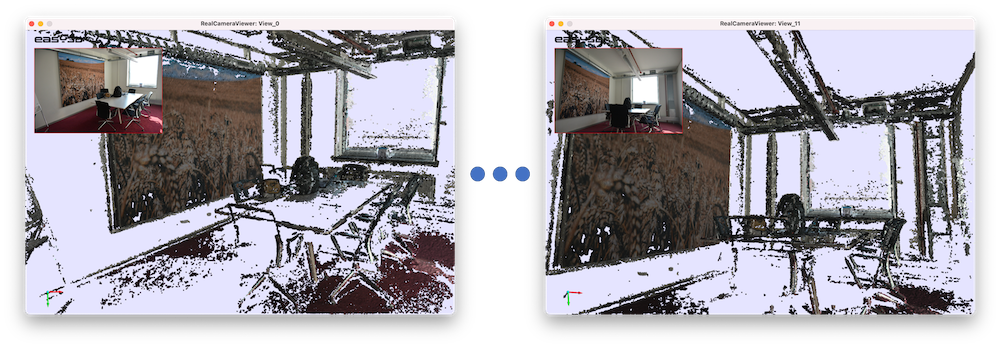

- Reconstructing permanent indoor structures from multi-view images

- BuildingBlocks: Enhancing 3D urban understanding and reconstruction with a comprehensive multi-modal dataset

- Holistic indoor scene understanding and reconstruction

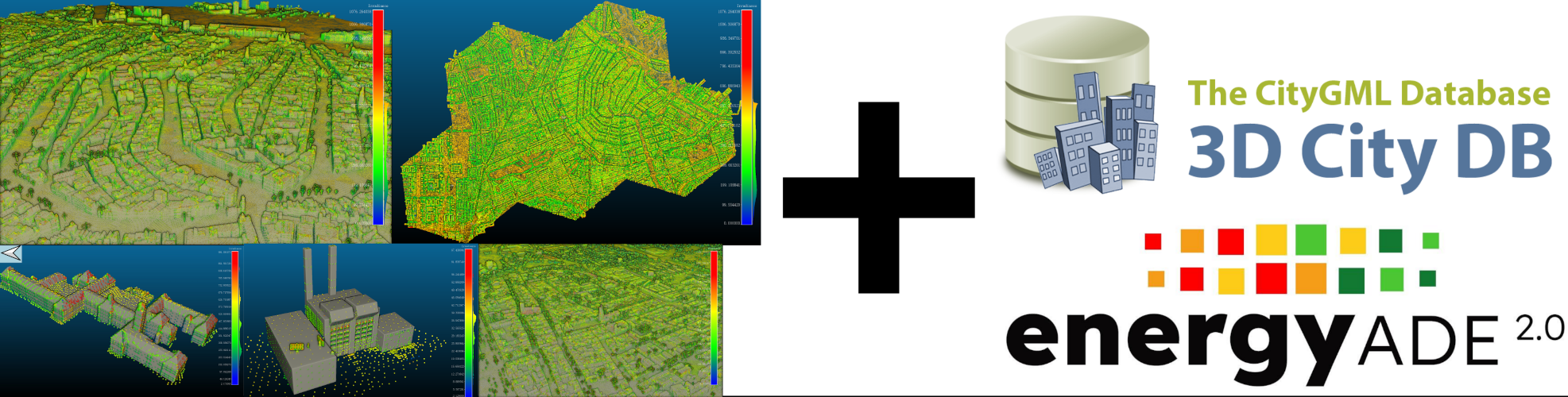

Integration of 3DCityDB + Energy ADE 2.0 into a Solar Potential Analysis Engine

This MSc thesis topic builds upon Longxiang Xu’s MSc thesis project, “High-resolution, large-scale, and fast calculation of solar irradiance with 3D City Models”. The aim is to extend, review, and update its current functionalities, and to add new ones. The expected new functionalities include:

- Support for loading CityObjects into the simulation scene from a 3DCityDB instance.

- Writing results to a 3DCityDB + Energy ADE 2.0 instance.

- Selection of CityObjects for simulation (e.g. computing the solar potential of a selected building within a scene).

- Generation of appearances based on the simulation results, and their export to a 3DCityDB instance.
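One way to approach the appearance-generation functionality is to map each surface's simulated value onto a colour ramp. The sketch below is a minimal illustration only, assuming annual irradiation values are already available in a dictionary keyed by a hypothetical surface id; it returns RGB triples in the 0-1 range used by CityGML X3DMaterial colours. The blue-to-red ramp is an arbitrary choice, and writing the appearances to a 3DCityDB instance is not shown.

```python
from typing import Dict, Tuple

RGB = Tuple[float, float, float]


def irradiance_to_rgb(value: float, vmin: float, vmax: float) -> RGB:
    """Map a value linearly onto a blue (low) to red (high) ramp.

    Values outside [vmin, vmax] are clamped; components are in the 0-1 range.
    """
    if vmax <= vmin:
        raise ValueError("vmax must be greater than vmin")
    t = (value - vmin) / (vmax - vmin)
    t = min(max(t, 0.0), 1.0)
    return (t, 0.0, 1.0 - t)


def colour_surfaces(results: Dict[str, float]) -> Dict[str, RGB]:
    """Assign a colour to every surface, scaling the ramp to the result range."""
    vmin, vmax = min(results.values()), max(results.values())
    if vmax == vmin:  # all values equal: widen the range to avoid dividing by zero
        vmax = vmin + 1.0
    return {sid: irradiance_to_rgb(v, vmin, vmax) for sid, v in results.items()}


if __name__ == "__main__":
    # Hypothetical surface ids and annual irradiation values (kWh/m2).
    annual = {"roof_1": 1100.0, "wall_1": 450.0, "wall_2": 775.0}
    for surface_id, rgb in colour_surfaces(annual).items():
        print(surface_id, tuple(round(c, 2) for c in rgb))
```

A fixed, project-wide range (instead of the per-scene minimum and maximum) would make colours comparable across simulation runs.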

Attendance of elective course GEO5014 in Q5 is highly suggested, as relevant topics needed for this thesis will be covered. Proficiency in programming is a plus, preferably C++. Before picking the topic, please contact us!

Contact: Camilo León Sánchez, Giorgio Agugiaro

Too cool or too hot? Cooling and heating demand scenarios based on the semantic 3D city model of Rotterdam

This thesis is embedded within the European project DigiTwins4PEDs, which investigates how Urban Digital Twins can be exploited to foster the transformation of urban districts into Positive Energy Districts (PEDs). As the municipality of Rotterdam is one of the project partners, this thesis will investigate how different scenarios of heating and cooling energy demand can be computed and managed for the building stock using the CityGML-based 3D city model of Rotterdam. Additionally, a “smart” and systematic way to deal with scenarios (data, metadata, results, etc.) will have to be developed within the thesis.

Attendance of elective course GEO5014 in Q5 is highly suggested, as relevant topics needed for this thesis will be covered.

You will program mainly in Python, and you will interact with the 3DCityDB using a bit of PL/pgSQL, too. Before picking the topic, please contact us!

Contact: Camilo León Sánchez, Giorgio Agugiaro

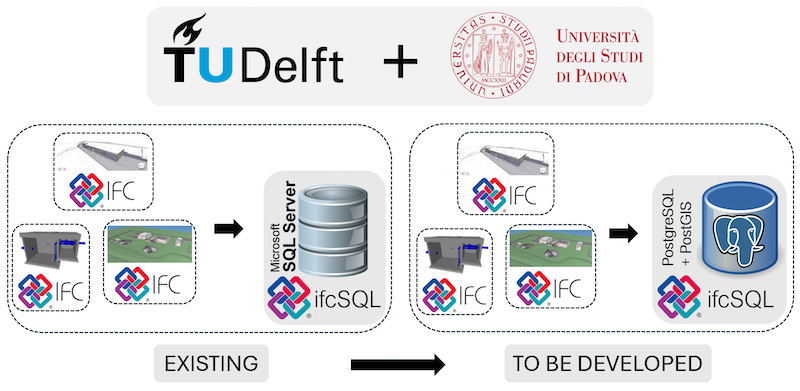

IFC in PostgreSQL/PostGIS

The Industry Foundation Classes (IFC) schema is a widely used open standard for Building Information Modeling (BIM). IFC data mostly rely on files for storage, but storing such data in databases could make it easier to handle multiple, possibly very large, datasets, to perform spatial and non-spatial queries on them, and to integrate them with other systems.

Database implementations of IFC exist, such as the one inside BIMserver, but perhaps the most interesting one is IfcSQL, which currently runs only on Microsoft SQL Server. However, Microsoft SQL Server is not open source and provides very limited spatial functionality (e.g. no 3D support at all), which means that only very basic spatial queries can be run, and only in 2D.

This thesis has two main goals. The first is to port the database schema of IfcSQL to PostgreSQL/PostGIS. The second is to investigate how the spatial functionality of PostGIS can be exploited for IfcSQL, either directly at database level (e.g. via PL/pgSQL functions) or by developing a Python-based interface. The usability of the developed solution will be tested on water infrastructure management use cases, in collaboration with the University of Padua, Italy, and the development team of IfcSQL.

Requirements: GEO1006 and GEO1004, suggested: GEO5014

Contact persons: Ken Arroyo Ohori, Giorgio Agugiaro

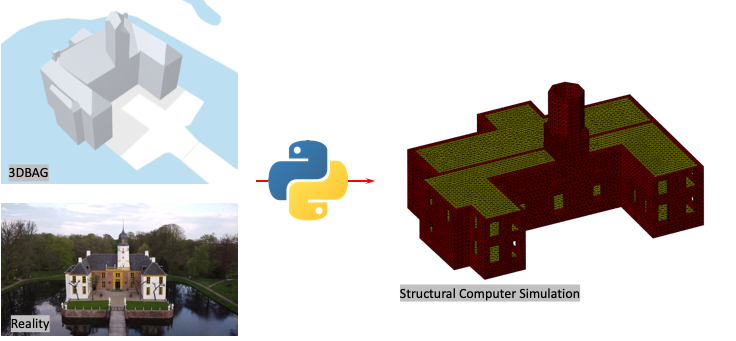

Creation of planar partitions from mismatched datasets

Many GIS applications are based around a planar partition of polygons—a set of polygons covering the map with no overlaps and no gaps between them. For example, 3D city models are generally built by raising polygons representing building footprints, roads, water bodies, etc. to different heights according to different rules.

However, good planar partition datasets are relatively rare. For many countries and cities, only linear data exist for many features (e.g. roads, railways, rivers). In other cases, the different features come from different sources and do not fit neatly together. Finally, some data are missing altogether and can only be computed from the gaps in other data using more complex rules (e.g. roads from the space between parcels, or terrain from the remainder after all other features).

The goal of this thesis would be to create a robust method to create planar partitions from multiple datasets based on customisable rules (e.g. line buffers, priority lists, Boolean set ops, etc). Another possibility would be a narrower thesis focussing on creating more detailed data for only one of these types, such as in this thesis.
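To make priority-based rules concrete, here is a minimal raster sketch, assuming every input dataset has already been rasterised onto a common grid of (row, column) cells. It combines three of the rule types mentioned above: a line buffer (growing a road centreline), a priority list (resolving overlaps), and a remainder class (filling gaps). The function names, the priority order, and the "terrain" remainder are hypothetical; an actual thesis solution would more likely work on vector geometry with exact Boolean operations, which this sketch does not attempt.

```python
from typing import Dict, List, Set, Tuple

Cell = Tuple[int, int]  # (row, column) on a common raster grid


def buffer_cells(cells: Set[Cell], radius: int) -> Set[Cell]:
    """Grow a rasterised linear feature (e.g. a road centreline) by `radius` cells."""
    grown: Set[Cell] = set()
    for r, c in cells:
        for dr in range(-radius, radius + 1):
            for dc in range(-radius, radius + 1):
                grown.add((r + dr, c + dc))
    return grown


def planar_partition(
    rows: int,
    cols: int,
    layers: Dict[str, Set[Cell]],
    priority: List[str],
    remainder: str = "terrain",
) -> List[List[str]]:
    """Label every cell with exactly one class, so there are no gaps or overlaps.

    Overlaps are resolved by the priority list (first entry wins); cells that no
    layer covers fall into the `remainder` class.
    """
    grid = [[remainder] * cols for _ in range(rows)]
    # Paint from lowest to highest priority so higher-priority layers overwrite.
    for name in reversed(priority):
        for r, c in layers.get(name, set()):
            if 0 <= r < rows and 0 <= c < cols:  # ignore cells outside the grid
                grid[r][c] = name
    return grid


if __name__ == "__main__":
    layers = {
        "building": {(0, 0), (0, 1), (1, 0), (1, 1)},
        # A road centreline along row 2, buffered so that it overlaps the building.
        "road": buffer_cells({(2, c) for c in range(6)}, 1),
        "water": {(4, 4), (4, 5)},
    }
    for row in planar_partition(5, 6, layers, ["building", "water", "road"]):
        print(" ".join(label[0] for label in row))
```

Because every cell receives exactly one label, the output is a planar partition by construction; the interesting research questions lie in choosing and customising the rules.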

Requirements: proficiency in programming, preferably with C++.

Contact: Ken Arroyo Ohori

Different heuristics for CGAL polygon repair

Invalid polygons are a common headache for GIS practitioners. There are a number of methods and tools to deal with them, including the new Polygon repair package of CGAL.

So far, that package includes only one repair method, based on the odd-even rule: starting from the exterior of the polygon, every time a ring edge is crossed one switches from the exterior to the interior of the polygon and vice versa. However, polygon repair is not a one-size-fits-all problem, and it would be best to have several repair methods based on different heuristics, such as Boolean set union (merging all rings into one shape) or difference (inner rings always carve out holes in the polygon).
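The difference between heuristics already shows up in a simple point-in-polygon test. The sketch below is not CGAL's implementation; it assumes rings are plain lists of (x, y) vertices and uses ray casting to classify a point under the odd-even rule (crossings summed over all rings) and under a simple union heuristic (inside if inside any ring on its own).

```python
from typing import List, Tuple

Point = Tuple[float, float]
Ring = List[Point]


def crossings(p: Point, ring: Ring) -> int:
    """Count the edges of `ring` crossed by a horizontal ray from p towards +x."""
    x, y = p
    count = 0
    n = len(ring)
    for i in range(n):
        (x1, y1), (x2, y2) = ring[i], ring[(i + 1) % n]
        # Half-open test on y so a vertex shared by two edges is counted once.
        if (y1 > y) != (y2 > y):
            x_at_y = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x_at_y > x:
                count += 1
    return count


def inside_odd_even(p: Point, rings: List[Ring]) -> bool:
    """Odd-even rule over all rings: inside iff the total crossing count is odd."""
    return sum(crossings(p, ring) for ring in rings) % 2 == 1


def inside_union(p: Point, rings: List[Ring]) -> bool:
    """Union heuristic: inside if p lies inside any single ring on its own."""
    return any(crossings(p, ring) % 2 == 1 for ring in rings)


if __name__ == "__main__":
    a = [(0, 0), (4, 0), (4, 4), (0, 4)]
    b = [(2, 0), (6, 0), (6, 4), (2, 4)]  # overlaps a between x = 2 and x = 4
    p = (3, 2)
    print("odd-even:", inside_odd_even(p, [a, b]))
    print("union:   ", inside_union(p, [a, b]))
```

For a point in the overlap of two rings, the odd-even rule reports it as outside (the overlap becomes a hole), while the union heuristic keeps it inside; conversely, a genuine hole ring is preserved by odd-even but filled by union.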

The goal of this thesis would be to investigate what other heuristics are useful in practice and to implement them.

Requirements: proficiency in C++ programming, some familiarity with CGAL would be desirable.

Contact: Ken Arroyo Ohori

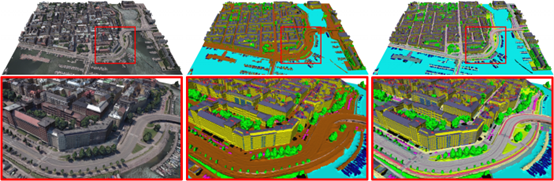

Urban Mesh Segmentation

The aim of this research is to develop instance or semantic segmentation methods for urban meshes. We will equip students with datasets, annotation tools, backbone networks, and pre-formulated ideas to facilitate the successful completion of this project. Your task is to enhance the performance of existing 3D segmentation methods to achieve better results on urban textured mesh data. You will learn about the most advanced deep learning techniques for 3D semantic segmentation tasks and/or interactive 3D annotation strategies. The outcomes of this research are eligible for publication in high-quality journals or conference proceedings. Students are also free to choose an additional co-supervisor. Participants in this project can select from the following three research directions:

- Develop automatic or interactive instance segmentation methods for urban objects.

- Develop semantic segmentation methods for urban meshes and their textures.

- Develop a locally adaptive receptive field approach for 3D semantic segmentation of urban scenes.

Requirements: 1) Experience in mesh processing. 2) Proficiency in Python or C++ programming. 3) Knowledge of machine learning or deep learning.

Contact: Weixiao Gao, Hugo Ledoux.

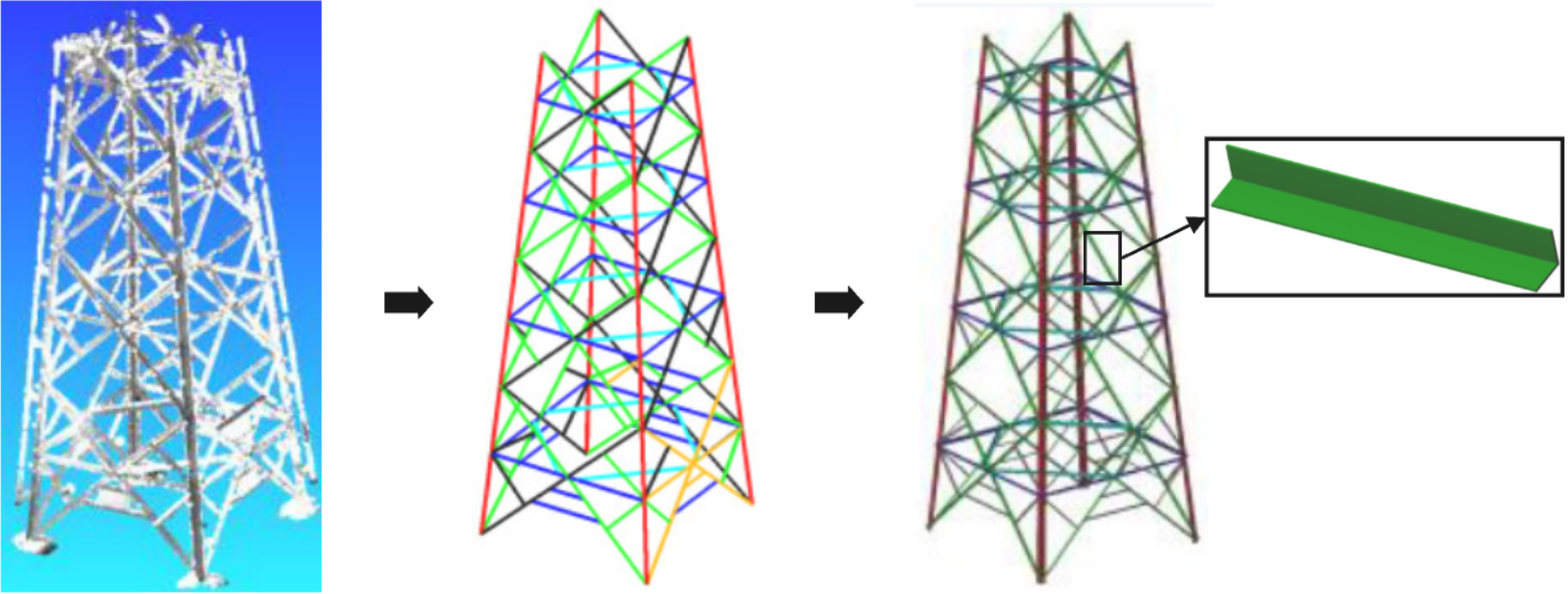

3D Reconstruction for Man-Made Urban Linear Objects

The objective of this research is to develop a deep learning-based 3D wireframe reconstruction method tailored for man-made urban linear objects. We will provide datasets, relevant algorithms, and clear research guidelines to ensure successful project completion by students. Your task will be to adapt existing 3D reconstruction algorithms for various urban linear objects such as pylons, wind turbines, lamp poles, or lattice towers. You will learn state-of-the-art 3D reconstruction techniques and their urban applications. Research outcomes may be published in high-quality journals or presented at conferences.

Requirements: 1) Experience in 3D reconstruction and deep learning. 2) Proficiency in Python or C++ programming.

Contact: Weixiao Gao, Hugo Ledoux.

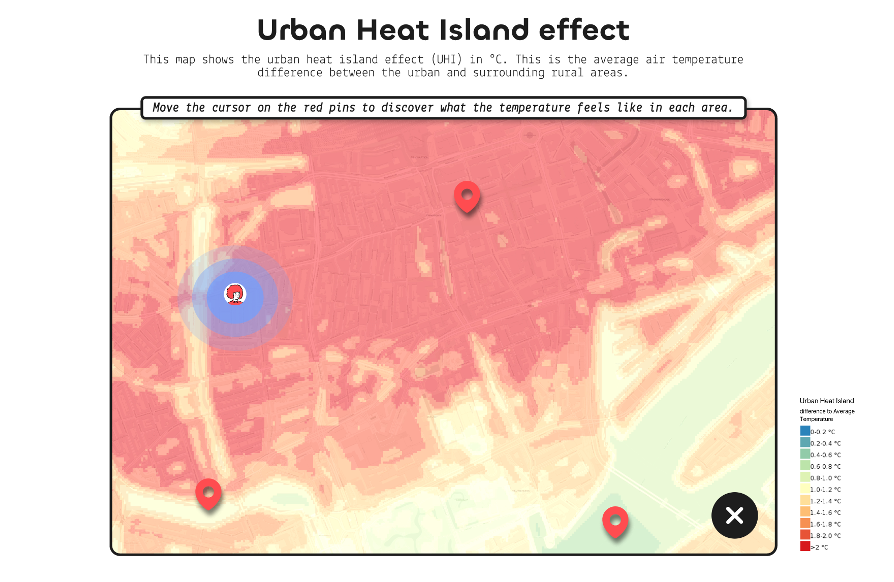

Citizen Voices in Climate Action: Developing digital platforms for citizen engagement in climate planning and design

Municipalities worldwide are developing plans and strategies to deal with increasing climate risks. Many of these interventions require citizen support and active participation, e.g., adopting solar PV panels or green roofs or increasing biodiversity in private backyards. Other strategies require people to change their behaviour and social norms. There is, therefore, a need to meaningfully engage citizens in climate strategies. Digital tools provide a means to do so with the potential to reach a large number of citizens. In this context, the Citizen Voice Initiative has developed several prototypes for citizen engagement in urban planning and design: (1) Citizens meet Climate (CmC): A digital participatory platform to empower citizens to take climate action and (2) BIO-CiVo: A digital platform to support citizens in improving neighbourhood biodiversity. Navigate through the prototypes:

BIO-CiVo-Evaluation Tool, and BIO-CiVo-Building Tool

The MSc thesis will develop these prototypes into real platforms. This entails (1) translating the Figma prototypes into static web pages and (2) progressively developing the interactive features, which include, e.g., maps (present in all prototypes), the use of different types of spatial data (climate data in the CmC prototype), and a 3D environment (BIO-CiVo-Building).

If you choose this topic, you can expect to learn about citizen engagement, web development, and spatial data handling. Programming experience and interest are advantages for this topic.

Contact: Clara Garcia-Sanchez, Juliana Goncalves

Revealing energy inequalities in The Netherlands

With technological advances and decreasing prices, solar energy is a key technology in the urban energy transition. The current policy focus on increasing the overall installed capacity via financial mechanisms has overshadowed energy justice considerations, leading to inequalities in solar energy adoption. This pattern of inequality is bound to deepen as financial mechanisms continue to be the preferred policy choice for other energy transition interventions, such as heat pumps or renovation incentives.

The MSc thesis will delve into questions of energy justice through the use of spatial data. You will start by studying the work of Kraaijvanger et al. (2023), which reveals socio-spatial inequalities in the transition to solar energy in The Hague, The Netherlands. You will then extend their work in one of two directions: (1) going beyond solar PV to look into heat pumps and renovation incentives, or (2) going beyond The Hague to create a map of energy inequalities in The Netherlands. The direction depends on your interests as well as on data availability.

If you choose this topic, you can expect to learn about spatial justice, energy transition technologies, and spatial analysis.

Figure: (a) Spatial distribution of the four access groups across The Hague per PC5 zone. A short description of the characteristics of each group/cluster is given in the legend at the top left of the figure. (b) The upper table provides the mean value of each cluster for each indicator, compared with the city-wide average of that indicator. The lower table presents the adoption rate in each cluster. The adoption rate (%) is defined as the percentage of residential buildings with solar PV systems (Kraaijvanger et al., 2023).
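The adoption-rate definition above translates directly into a per-cluster ratio. The sketch below assumes a hypothetical input format: one (cluster_id, has_pv) pair per residential building, for example obtained by joining building records to PC5 zones and their cluster labels. It is an illustration of the definition, not the original authors' code.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def adoption_rate_by_cluster(buildings: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Adoption rate (%) per cluster: share of residential buildings with solar PV.

    `buildings` yields one (cluster_id, has_pv) pair per residential building.
    """
    totals: Dict[str, int] = defaultdict(int)
    with_pv: Dict[str, int] = defaultdict(int)
    for cluster, has_pv in buildings:
        totals[cluster] += 1
        if has_pv:
            with_pv[cluster] += 1
    return {cluster: 100.0 * with_pv[cluster] / totals[cluster] for cluster in totals}


if __name__ == "__main__":
    # Hypothetical records: cluster label of each building's PC5 zone and its PV status.
    sample = [("A", True), ("A", False), ("A", True), ("A", False),
              ("B", False), ("B", True), ("B", False)]
    print(adoption_rate_by_cluster(sample))
```

Extending the analysis to heat pumps or renovation incentives would, under this framing, only change what the boolean flag records.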

Contact: Clara Garcia-Sanchez, Juliana Goncalves

Developing an open-source GIS pipeline tailored for FastEddy

Within the past year, we have been actively collaborating with the National Center for Atmospheric Research (NCAR), which recently developed FastEddy, a resident-GPU code capable of running large urban microclimate simulations with high efficiency. Our collaboration aims to develop an open-source GIS pipeline for the automatic reconstruction of urban environments that can be swiftly prepared and used within the FastEddy framework.

The MSc thesis will cover the full chain of the tailored automatic reconstruction (related to the work performed within GEO1004): starting by exploring the impact of the different projections available within WRF, the software they use for boundary conditions, and of the footprint and point cloud data available in the areas of interest, and finishing with the conversion into NetCDF, the format used by FastEddy. The thesis does NOT include running their fluid dynamics code.

If you choose this topic, you can expect to learn about automatic geometry reconstruction and GIS data handling. Programming experience and interest are an advantage for this topic. Your work might require you to implement source code (in C++, Python, or any other language you prefer).

Contact: Clara Garcia-Sanchez, Hugo Ledoux

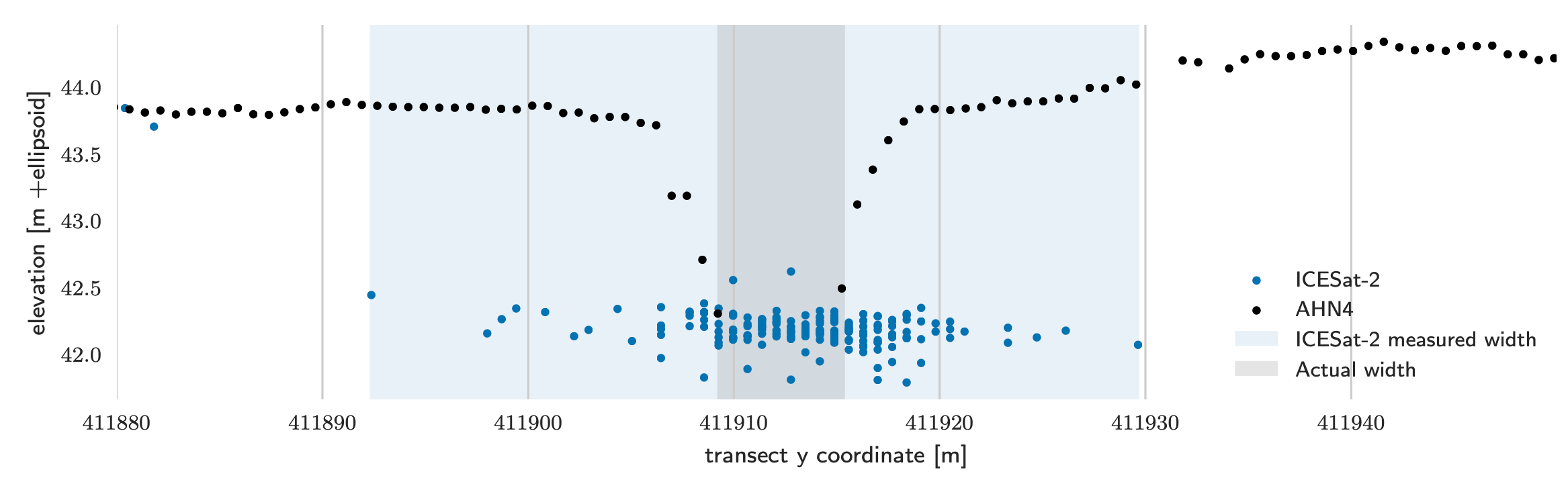

Water (level) detection with ICESat-2 measurements

ICESat-2, a spaceborne lidar system, can measure terrain elevations every 0.7 m along its ground track. As such, it can be seen as a profiling lidar, drawing cross-sections over the Earth. When combined with a water mask, it can also be used to measure water levels. In this topic we will investigate automatic water (level) detection methods, making use of the multiple-return pattern that ICESat-2 exhibits over strong reflectors such as water (see figure). If successful, the results could be used to update and expand mangrove and wetland maps (which are currently based on optical imagery), which are critical for conservation purposes and carbon stock models. The method(s) should be able to scale to the full ICESat-2 dataset (1 PB), and should therefore ideally be implemented in the Julia programming language (if you know C++ and Python, Julia is not very difficult to learn, and we can help).
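As a starting point for comparison, a naive baseline could combine the water mask with a height histogram. The sketch below is hypothetical and much simpler than the detection methods this topic targets: it assumes photon heights (in metres) and a per-photon water-mask flag are already available as plain lists, and it takes the most populated height bin as the water surface, on the assumption that the surface return is denser than the weaker secondary returns appearing below it. It is written in Python for readability; a production version would follow the Julia recommendation above.

```python
import math
from collections import Counter
from typing import Optional, Sequence


def water_level(
    heights: Sequence[float],
    in_water: Sequence[bool],
    bin_size: float = 0.1,
) -> Optional[float]:
    """Naive water surface estimate from along-track photon heights (metres).

    Only photons flagged by the water mask are used. Heights are binned and the
    most populated bin is taken as the surface; the mean height of the photons
    in that bin is returned. Returns None if no photon falls inside the mask.
    """
    selected = [h for h, wet in zip(heights, in_water) if wet]
    if not selected:
        return None
    counts = Counter(math.floor(h / bin_size) for h in selected)
    best_bin, _ = counts.most_common(1)[0]
    surface = [h for h in selected if math.floor(h / bin_size) == best_bin]
    return sum(surface) / len(surface)


if __name__ == "__main__":
    # Hypothetical photon heights: a surface near 1.03 m, a weaker echo below it,
    # and one photon outside the water mask.
    heights = [1.02, 1.04, 1.03, 0.55, 0.52, 5.0]
    mask = [True, True, True, True, True, False]
    print(water_level(heights, mask))
```

A baseline like this fails when secondary returns are as dense as the surface, which is precisely where exploiting the known multiple-return pattern should help.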

Contact: Maarten Pronk + Hugo Ledoux

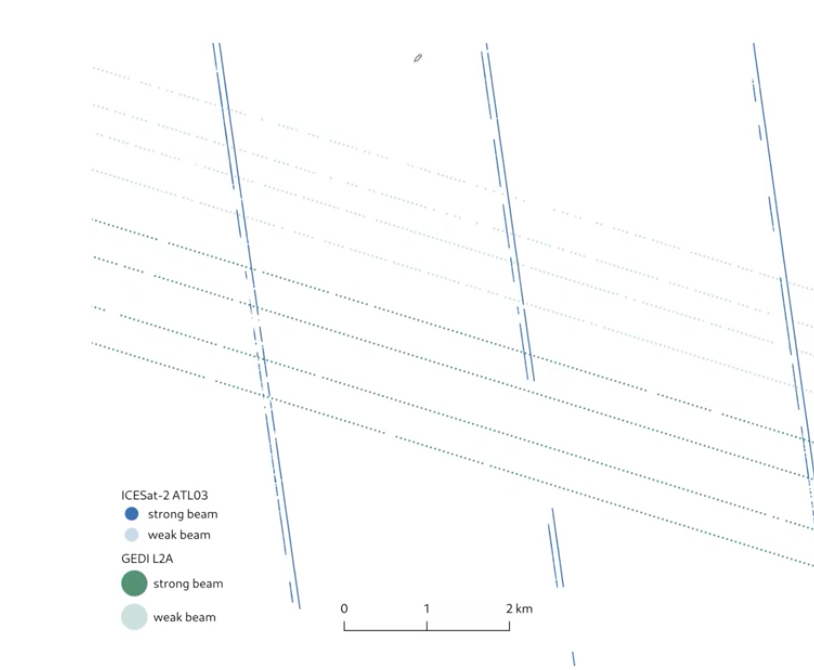

Filling the massive gaps in space lidar datasets with a diffusion model

As you saw during Maarten Pronk's GEO1015 lecture, space lidar datasets such as ICESat-2 and GEDI are very sparsely distributed (often with kilometres without data), which makes global coverage difficult.

The aim of this thesis is to test the deep learning diffusion model presented in this paper, compare it with other methods, and develop it further.

The code of the project is open source, and Python can be used.

Contact: Hugo Ledoux + Maarten Pronk

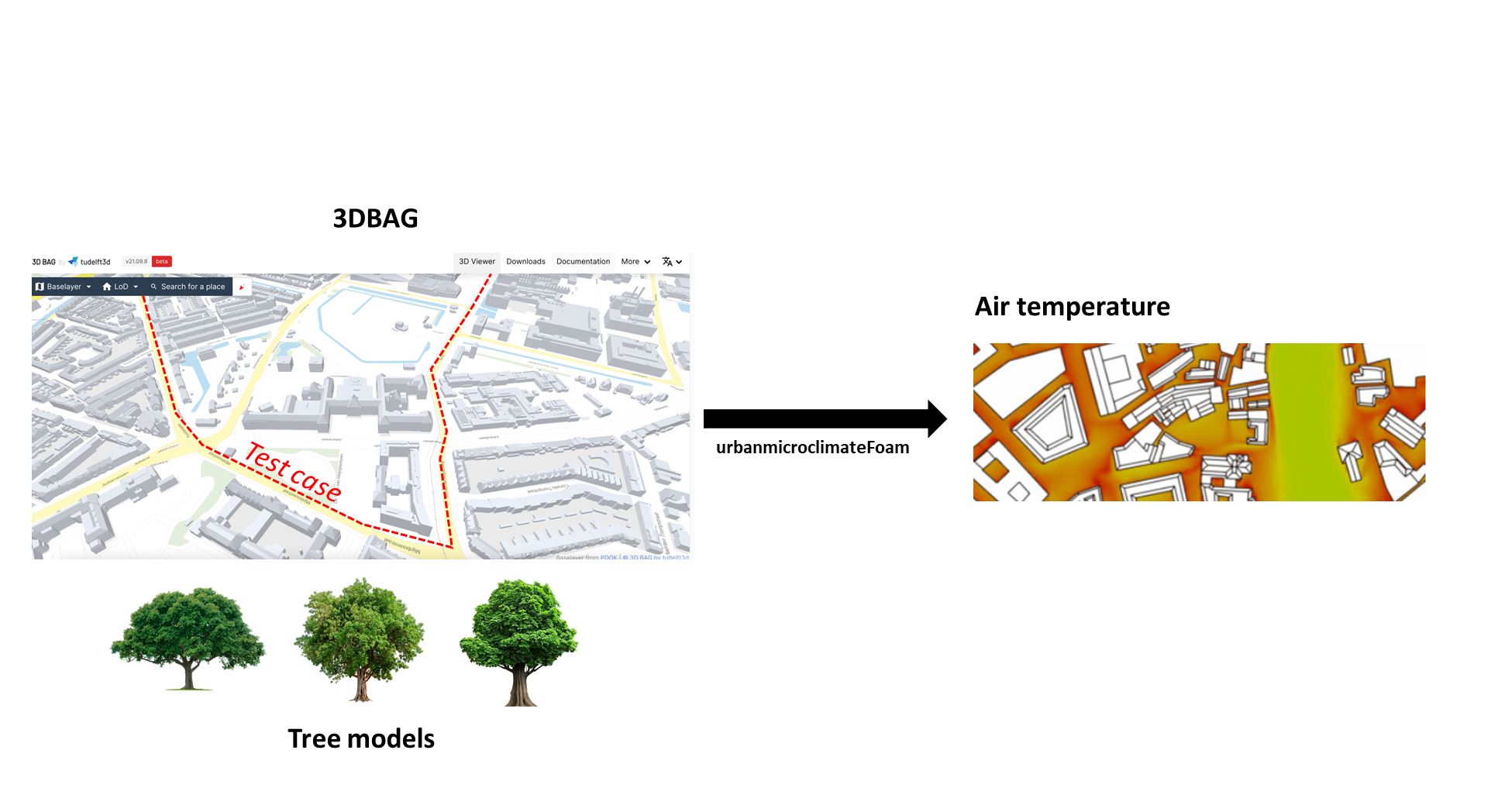

Urban microclimate simulations using vegetation

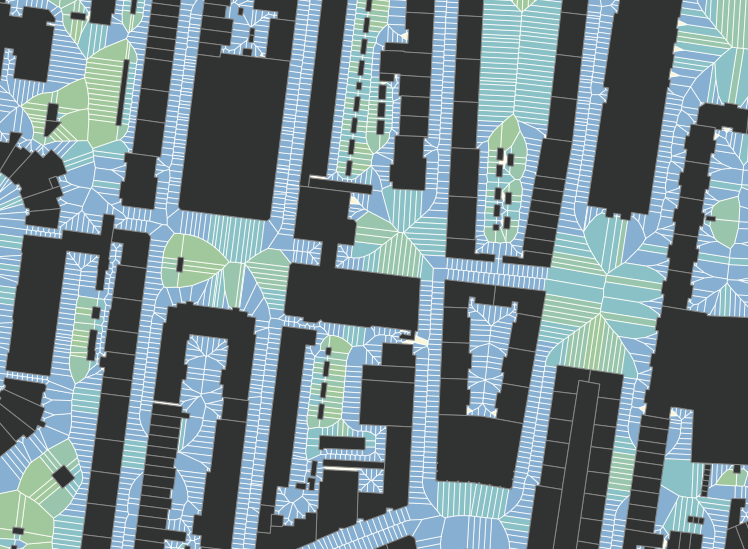

The aim of this project is to use the OpenFOAM solver created by ETH Zurich to perform simulations of urban microclimates at the neighbourhood scale and to test several vegetation models (here). Simulations should be performed over the TU Delft campus, using City4CFD to generate the input building geometry. This MSc thesis is expected to:

- Generate the simulation domain and meshing from a set of buildings that can be selected in the 3DBAG;

- Specify boundary conditions from weather data;

- Perform simulations on a test case located in the university campus of TU Delft;

- Study the outdoor air temperature resulting from various vegetation models that are specified in the urbanMicroclimateFoam solver.
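For the boundary-condition step, a common way to turn a single weather-station wind reading into an inlet velocity profile is the neutral logarithmic law, U(z) = u*/κ · ln((z + z0)/z0); OpenFOAM's atmBoundaryLayerInletVelocity condition is built on a profile of this form. The sketch assumes neutral stratification, a von Kármán constant of 0.41, and an assumed roughness length z0; the values used are hypothetical, and the thermal boundary conditions needed by urbanMicroclimateFoam are not covered.

```python
import math

KAPPA = 0.41  # von Karman constant (0.41 is a common choice; 0.40 is also used)


def friction_velocity(u_ref: float, z_ref: float, z0: float) -> float:
    """Invert the neutral log law at a reference height, e.g. a 10 m station wind speed."""
    return KAPPA * u_ref / math.log((z_ref + z0) / z0)


def log_law_velocity(z: float, u_star: float, z0: float) -> float:
    """Mean wind speed U(z) = u*/kappa * ln((z + z0) / z0) in a neutral boundary layer."""
    return u_star / KAPPA * math.log((z + z0) / z0)


if __name__ == "__main__":
    # Hypothetical input: 5 m/s measured at 10 m, assumed roughness length 0.5 m.
    u_star = friction_velocity(5.0, 10.0, 0.5)
    for z in (2.0, 10.0, 50.0, 100.0):
        print(f"z = {z:5.1f} m  U = {log_law_velocity(z, u_star, 0.5):.2f} m/s")
```

The roughness length is the most uncertain input here; it would typically be chosen from the terrain around the weather station rather than around the campus itself.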

The prerequisites for this project are basic knowledge of CFD simulations, programming skills in C/C++, and experience with the Unix operating system.

Contact: Miguel Martin & Clara Garcia-Sanchez

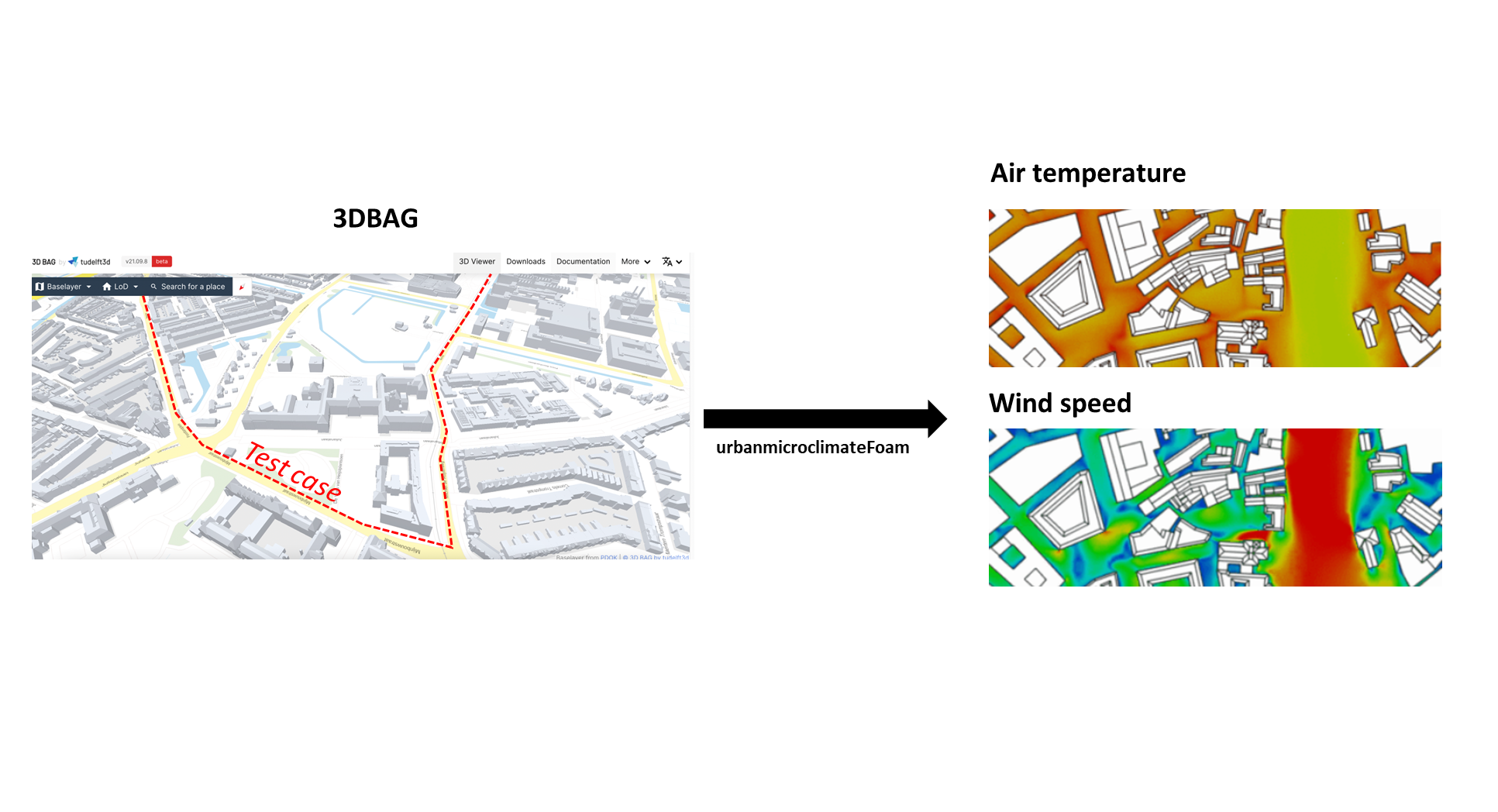

Urban microclimate simulations @ TU Delft

The aim of this project is to use the OpenFOAM solver created by ETH Zurich to perform simulations of urban microclimates at the neighbourhood scale (here). Simulations should be performed over the TU Delft campus, using City4CFD to generate the input building geometry. This MSc thesis is expected to:

- Generate the simulation domain and meshing from a set of buildings that can be selected in the 3DBAG;

- Specify boundary conditions from weather data;

- Perform simulations on a test case located in the university campus of TU Delft; and

- Study the various output variables that can be obtained from the urbanMicroclimateFoam solver.

The prerequisites for this project are basic knowledge of CFD simulations, programming skills in C/C++, and experience with the Unix operating system.

Contact: Miguel Martin & Clara Garcia-Sanchez

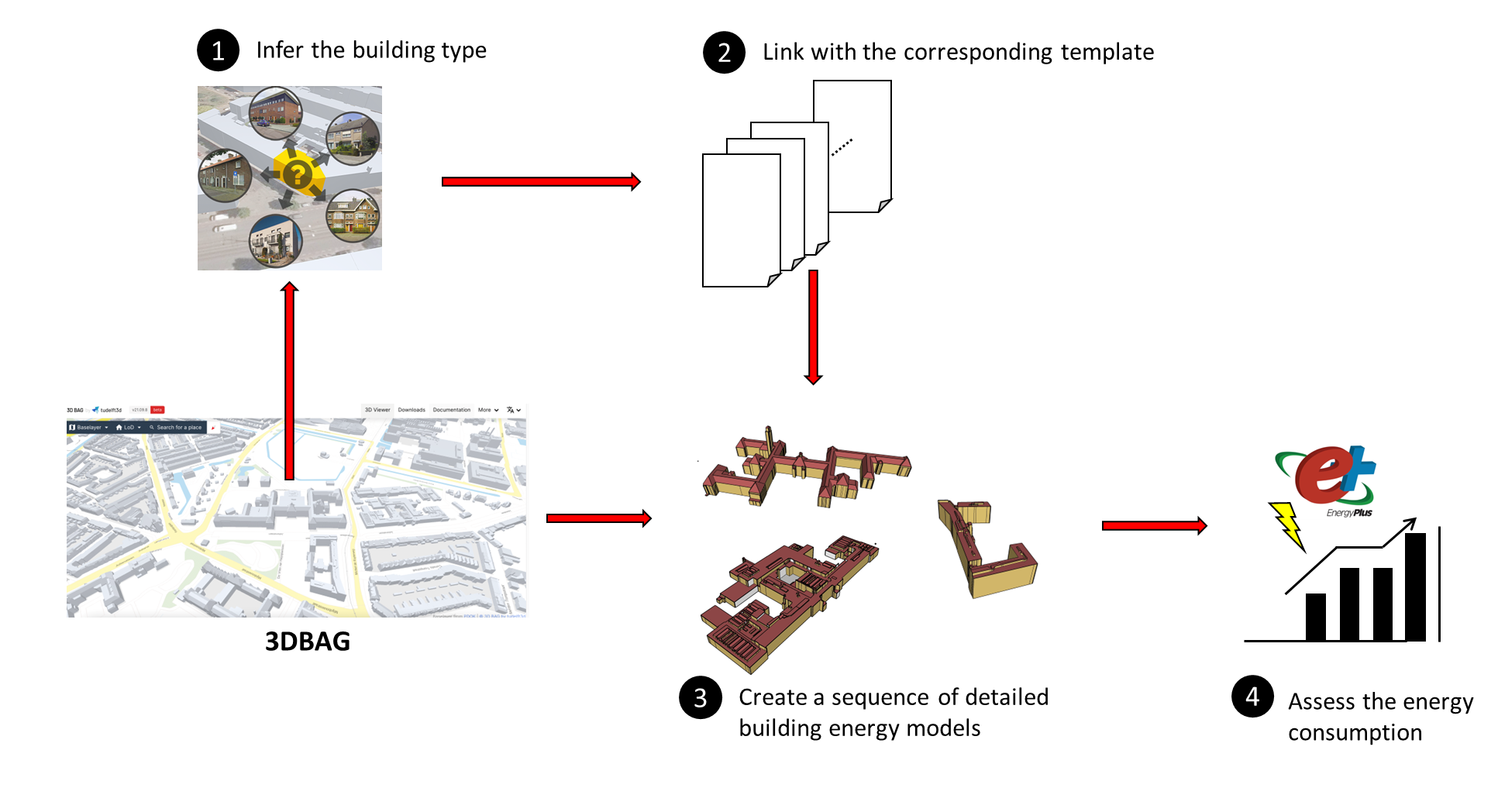

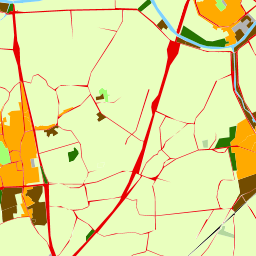

Automatic creation of detailed building energy models from 3DBAG

The aim of this project is to develop a procedure from which a sequence of detailed building energy models can be automatically created from the 3DBAG. From the models, it should be possible to estimate the energy consumed by buildings at the neighbourhood scale using the EnergyPlus simulation program (here). The procedure should be implemented in Python. This project is a continuation of another MSc thesis on inferring the residential building type from the 3DBAG (here). This MSc thesis is expected to:

- Identify material properties, internal heat gains, and HVAC systems for each building type;

- Include this information in EnergyPlus template files;

- Automatically create a sequence of detailed building energy models from EnergyPlus template files and 3DBAG;

- Perform simulations (in sequence or in parallel) to assess the energy consumed by buildings at the neighbourhood scale and evaluate retrofitting strategies.
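The template step can be sketched with Python's standard `string.Template`. Everything below is a hypothetical illustration: the template contains only a single EnergyPlus `Material` object (Name, Roughness, Thickness, Conductivity, Density, Specific Heat), the building types and property values are made up rather than calibrated, and geometry from the 3DBAG, internal gains, and HVAC are not included. A real pipeline would fill a complete IDF template per building type, or use a dedicated IDF library.

```python
from string import Template
from typing import Dict, List

# Hypothetical, heavily simplified template: a single EnergyPlus Material object.
# A real template would also hold constructions, internal gains, HVAC, etc.
WALL_MATERIAL = Template(
    """Material,
  $name,              !- Name
  MediumRough,        !- Roughness
  $thickness,         !- Thickness {m}
  $conductivity,      !- Conductivity {W/m-K}
  $density,           !- Density {kg/m3}
  $specific_heat;     !- Specific Heat {J/kg-K}
"""
)

# Illustrative (not calibrated) wall properties per hypothetical building type.
PROPERTIES_BY_TYPE: Dict[str, Dict[str, float]] = {
    "terraced": {"thickness": 0.2, "conductivity": 0.9, "density": 1900, "specific_heat": 840},
    "apartment": {"thickness": 0.3, "conductivity": 0.5, "density": 1400, "specific_heat": 1000},
}


def make_idf_snippet(building_id: str, building_type: str) -> str:
    """Fill the template with the properties of the building's type."""
    props = PROPERTIES_BY_TYPE[building_type]
    return WALL_MATERIAL.substitute(name=f"Wall_{building_id}", **props)


def make_models(buildings: List[Dict[str, str]]) -> Dict[str, str]:
    """One IDF snippet per building; `buildings` holds hypothetical id/type records."""
    return {b["id"]: make_idf_snippet(b["id"], b["type"]) for b in buildings}


if __name__ == "__main__":
    records = [{"id": "b1", "type": "terraced"}, {"id": "b2", "type": "apartment"}]
    for building_id, idf_text in make_models(records).items():
        print(f"--- {building_id} ---")
        print(idf_text)
```

Keeping the per-type properties in a separate table, apart from the template text, makes it straightforward to swap in retrofitting scenarios by editing the table only.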

The prerequisites for this project are basic knowledge of building physics and programming skills in Python.

Contact: Dr. Miguel Martin; Camilo Leon-Sanchez

Developing an open-source Multifunctional Green Infrastructure Planning Support System

Urban areas face increasing pressure to address multiple environmental and social challenges simultaneously, including climate change adaptation, biodiversity loss, social inequality, and public health concerns. Traditional infrastructure planning approaches often address these issues in isolation, leading to inefficient resource allocation and missed opportunities for synergistic solutions.

Multifunctional Green-Blue Infrastructure (GBI) offers a nature-based approach that can simultaneously deliver multiple ecosystem services across different spatial scales. However, current planning processes lack integrated digital tools that can effectively guide stakeholders through collaborative decision-making while ensuring equitable distribution of benefits and addressing diverse societal needs. The primary objective of this research is to develop an innovative interactive geospatial planning support system that facilitates collaborative evidence-based decision-making for multifunctional green-blue infrastructure implementation in urban areas.

If you are interested in this topic, you can expect to learn about advanced geospatial technologies and methodologies including multi-criteria spatial analysis, web-based GIS development, and spatial decision support system design. Through this interdisciplinary approach, you will gain valuable experience in translating complex spatial analysis into accessible decision-making tools, preparing you for careers in smart city development, environmental consulting, urban planning technology, or spatial data science with a focus on sustainability and social equity.

Prerequisites: Proficiency in GIS and software development (e.g., QGIS, R, Python) and understanding of/interest in GBI and urban/landscape planning.

Contact: Daniele Cannatella

Development of the client-side part of the 3DCityDB-Tools plugin for QGIS to support CityGML 3.0 data

The 3DCityDB-Tools plugin for QGIS allows users to conveniently use CityGML/CityJSON data stored in the free and open-source 3D City Database (3DCityDB). For the new 3DCityDB v5.0, which introduces support for CityGML 3.0, an MSc thesis has already investigated and developed the (PostgreSQL-based) server-side part of the plugin.

The scope of this thesis is to build upon that work and to develop the client-side part of the plugin, thus allowing users to interact with CityGML 3.0 data in the database through the usual QGIS GUI instead of writing SQL commands.

Attendance of elective course GEO5014 in Q5 is highly suggested, as relevant topics needed for this thesis will be covered.

You will program mainly in Python, you will learn how to use the Qt libraries, and you will need to use (a bit of) PL/pgSQL, too. Before picking the topic, please contact us!

Contact: Giorgio Agugiaro

Adding support for CitySim to the 3DCityDB-Tools plugin for QGIS

CitySim is an open-source simulation software for performing different energy simulations of buildings/districts (e.g. space heating energy demand, solar irradiation, urban heat islands). The 3DCityDB-Tools plugin for QGIS allows users to conveniently use CityGML/CityJSON data stored in the free and open-source 3D City Database (3DCityDB). The scope of this thesis is to extend the QGIS plugin so that data can be prepared, CitySim's energy simulations run, and the simulation results collected and analysed from within QGIS, by developing a GUI dialog and extending a bidirectional interface between the two software packages. A first Python-based prototype of the interface was already partially developed in a thesis completed in June 2022. The ultimate goal is to allow for a seamless flow of information and to perform energy simulations in CitySim exploiting the added value of a semantic 3D city model encoded using the CityGML data model.

The thesis is a collaboration between the 3DGeoinformation group and the Idiap Research Institute in Switzerland. Attendance of elective course GEO5014 in Q5 is highly recommended, as many relevant topics needed for this thesis will be covered.

You will program in Python and in PL/pgSQL. Before picking the topic, please contact us!

Contact: Giorgio Agugiaro, Camilo León Sánchez

Accuracy assessment of EnergyBAG (in-house urban building energy simulation tool)

The aim of this MSc thesis is to evaluate the output of EnergyBAG, an in-house urban building energy simulation (UBES) software tool developed by Camilo León-Sánchez during his PhD research.

The scope of the research is to assess the accuracy of the results obtained by the UBES tool when computing the energy demand of buildings, and to point out possible sources of error in the workflow. Other existing UBES tools, such as SimStadt, CitySim, City Energy Analyst (CEA) or EnergyPlus, are expected to be used in this research.

Attendance of elective course GEO5014 in Q5 is highly recommended, as many relevant topics needed for this thesis will be covered.

Contact: Camilo León Sánchez, Giorgio Agugiaro

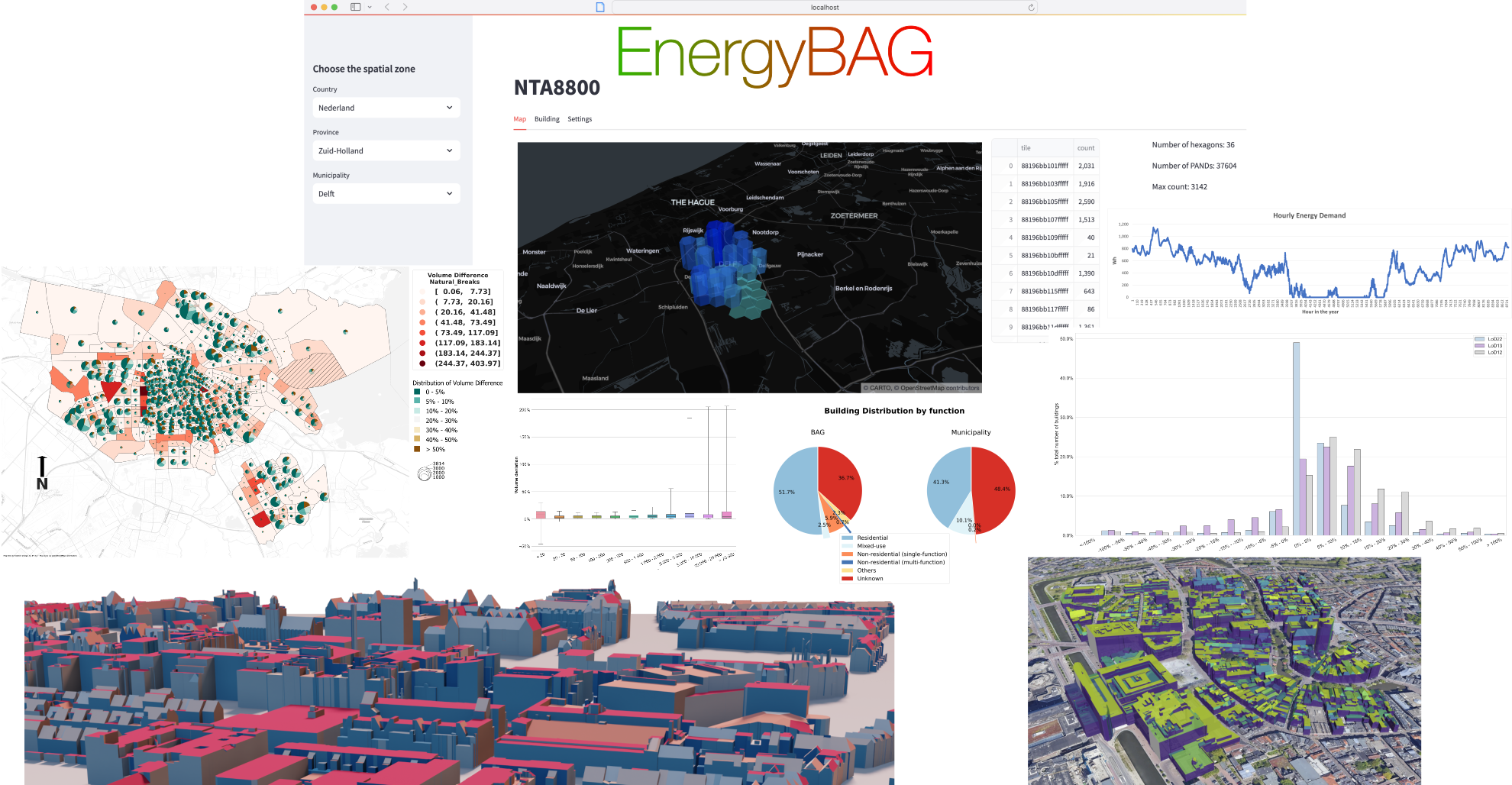

Development of a Graphical User Interface for EnergyBAG (in-house urban building energy simulation tool)

The scope of this MSc thesis is the design and development of a web-based Graphical User Interface (GUI) that enables interaction with EnergyBAG, an in-house urban building energy simulation (UBES) software tool developed by Camilo León-Sánchez during his PhD research.

Among its functionalities, the GUI should allow 3D visualization of semantic 3D city models (3DCM) and generate graphs and plots that aggregate the output of the simulation tool. Data are managed in a 3DCityDB instance that supports the Energy ADE.

Attendance of elective course GEO5014 in Q5 is highly recommended, as many relevant topics needed for this thesis will be covered.

Contact: Camilo León Sánchez, Giorgio Agugiaro

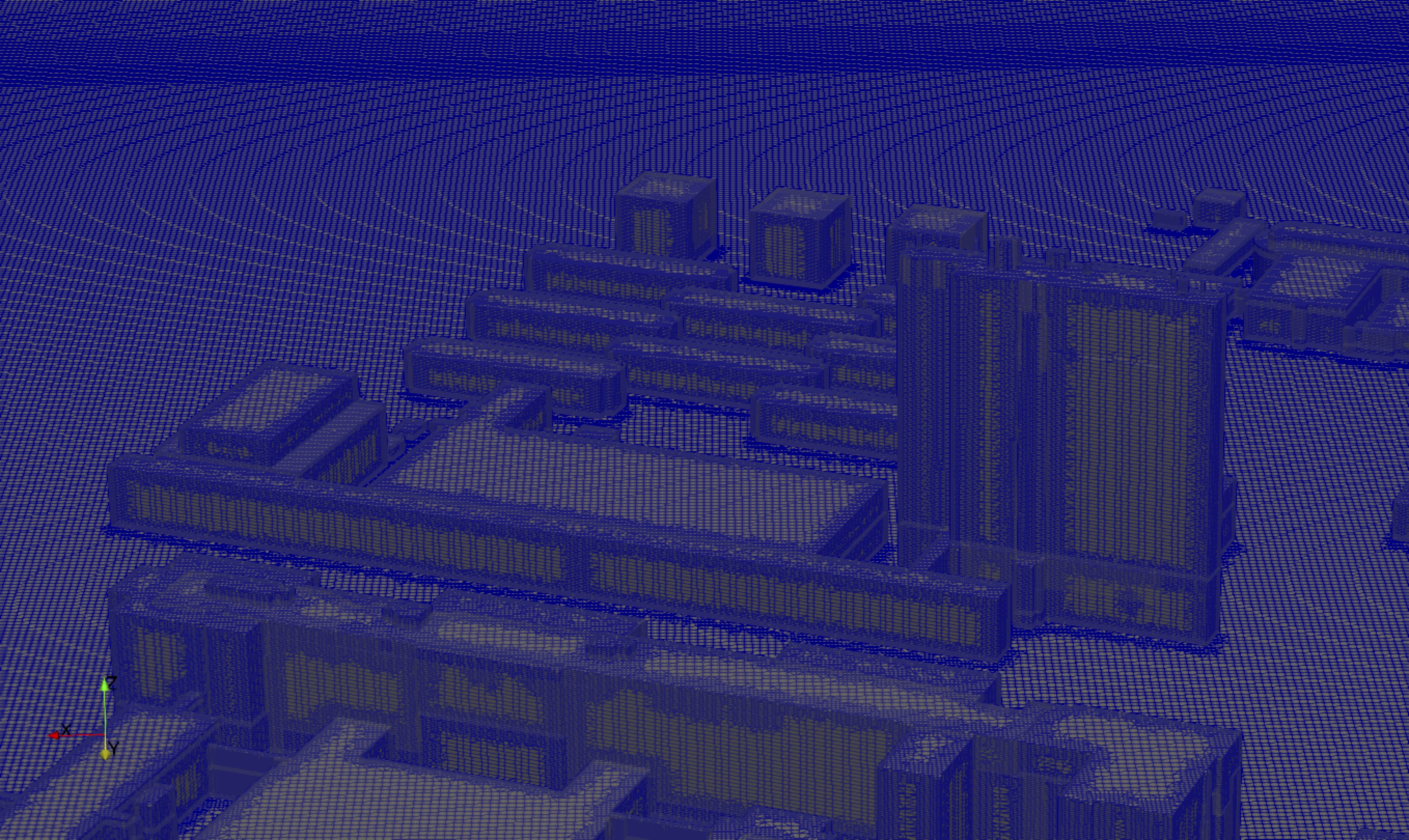

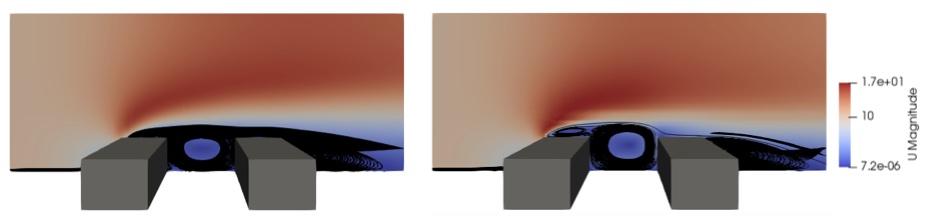

The effects of building model automatic reconstruction methods for CFD simulations

The digitalization of architecture and the built environment means that there is a new wealth of digital data that can help generate city-scale models. However, not all these sources allow models of the same quality to be created. The effects on CFD of models of different quality or abstraction have rarely been evaluated. Even rarer is the evaluation of models that fall outside the established LoD frameworks, e.g. voxelized models and models created with marching cubes.

In this thesis, these different models and their effects on CFD simulations will be evaluated. Based on the results, it might be possible to make suggestions or set up rules describing viable models for CFD processing. Extensions to other kinds of city analysis could be made as well, if desired.

If you choose this topic, you can expect to work with building models, voxelization, and computational fluid dynamics simulations performed with OpenFOAM.

Contact: Clara Garcia-Sanchez, Jasper van der Vaart

Development of quality assessment methods for point cloud datasets

Rijkswaterstaat is transitioning into a data-driven organization, with 3D point cloud data playing a crucial role in its work processes and digital twin vision. The effectiveness of the current research project, which focuses on merging different 3D point clouds into the Integrated Heightdataset of the Netherlands (IHN), relies on validation as a key component. The focus of this MSc thesis is therefore to research the possibilities for validating the quality of point clouds received from large infrastructure projects, such as the Moerdijk bridge zone or tunnels in the Rotterdam area. If you choose this topic, you will have the opportunity to work for the Department of Advice and Validation of Geodata at Rijkswaterstaat, with access to numerous elevation data products, and to contribute to the digital twin and IHN research projects.
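One elementary validation check is the vertical accuracy of a point cloud against surveyed control points. The sketch below assumes both are given as (x, y, z) tuples in the same reference system, takes the cloud height at each control point as the mean z of the points within a hypothetical plan radius, and reports bias, standard deviation, and RMSE. It is a brute-force illustration of one possible metric, not Rijkswaterstaat's validation procedure; large datasets would need a spatial index.

```python
import math
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float, float]  # (x, y, z) in a common reference system


def vertical_errors(
    cloud: Sequence[Point], controls: Sequence[Point], radius: float = 0.5
) -> List[float]:
    """Height difference (cloud minus control) at each control point.

    The cloud height at a control point is the mean z of all points within
    `radius` in plan; control points with no nearby points are skipped.
    Brute force, so only suitable for small examples.
    """
    errors = []
    for cx, cy, cz in controls:
        nearby = [z for x, y, z in cloud if (x - cx) ** 2 + (y - cy) ** 2 <= radius ** 2]
        if nearby:
            errors.append(sum(nearby) / len(nearby) - cz)
    return errors


def summarise(errors: Sequence[float]) -> Dict[str, float]:
    """Mean error (bias), standard deviation, and RMSE of the height differences."""
    if not errors:
        raise ValueError("no control point is covered by the point cloud")
    n = len(errors)
    mean = sum(errors) / n
    std = math.sqrt(sum((e - mean) ** 2 for e in errors) / n)
    rmse = math.sqrt(sum(e * e for e in errors) / n)
    return {"n": float(n), "mean": mean, "std": std, "rmse": rmse}


if __name__ == "__main__":
    # Hypothetical data: two covered control points and one outside the cloud.
    cloud = [(0.0, 0.0, 1.10), (0.1, 0.0, 1.10), (10.0, 10.0, 5.00)]
    controls = [(0.0, 0.0, 1.00), (10.0, 10.0, 5.20), (50.0, 50.0, 0.00)]
    print(summarise(vertical_errors(cloud, controls)))
```

Reporting the bias separately from the RMSE helps distinguish a systematic offset (e.g. a datum problem) from random noise.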

Programming experience and interest are an advantage for this topic. Your work might require you to implement source code to analyse various large point clouds (in C++, Python, or any other language you prefer).

Contact: Daan van der Heide, Jantien Stoter

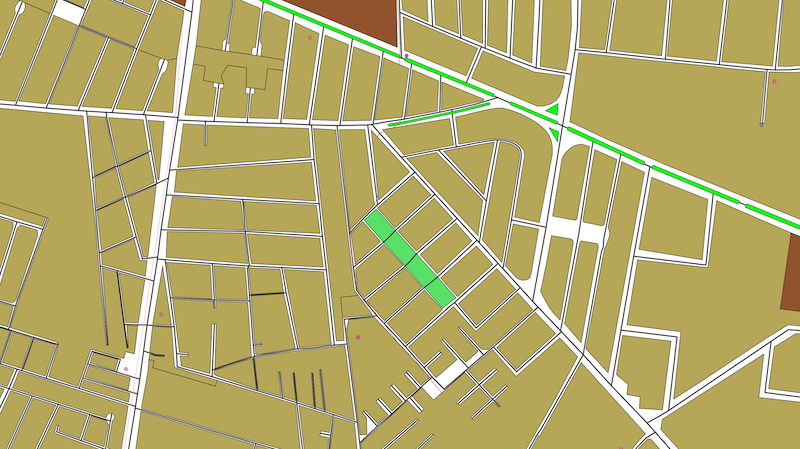

Using urban morphology to optimize biking and running routes in cities?

The RIVM's NSL monitoring tool provides information on air pollution in streets for most areas in the Netherlands. The tool uses urban morphology, along with other parameters, to estimate local air quality.

Within this MSc thesis, we will exploit urban morphology and NSL monitoring data to optimize running and biking routes within Dutch urban areas. For that, open-source tools such as momepy and previously developed approaches, such as de Jongh's thesis (see image attached), will be explored.
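Route optimization of this kind can be framed as a shortest-path problem in which edge costs combine street length with pollution exposure. The sketch below runs Dijkstra's algorithm with a hypothetical cost, length × (1 + alpha × pollution), where pollution is assumed to be normalised to 0-1 per street segment (for instance derived from NSL values) and alpha sets how strongly exposure is penalised. The graph format and weighting are assumptions for illustration only; streets usable in both directions need an edge in each direction.

```python
import heapq
import math
from typing import Dict, List, Tuple

# Graph: node -> list of (neighbour, length_m, pollution_0_to_1) edges.
Graph = Dict[str, List[Tuple[str, float, float]]]


def cleanest_route(graph: Graph, source: str, target: str,
                   alpha: float = 1.0) -> Tuple[float, List[str]]:
    """Dijkstra with the hypothetical cost length * (1 + alpha * pollution).

    Returns (total cost, node path); (inf, []) if the target is unreachable.
    """
    dist = {source: 0.0}
    prev: Dict[str, str] = {}
    heap = [(0.0, source)]
    visited = set()
    while heap:
        d, node = heapq.heappop(heap)
        if node in visited:
            continue  # stale heap entry
        visited.add(node)
        if node == target:
            break
        for nbr, length, pollution in graph.get(node, []):
            nd = d + length * (1.0 + alpha * pollution)
            if nd < dist.get(nbr, math.inf):
                dist[nbr] = nd
                prev[nbr] = node
                heapq.heappush(heap, (nd, nbr))
    if target not in dist:
        return math.inf, []
    path = [target]
    while path[-1] != source:
        path.append(prev[path[-1]])
    return dist[target], path[::-1]


if __name__ == "__main__":
    # A short but polluted street (A-B) versus a longer, clean detour via C.
    streets: Graph = {"A": [("B", 100.0, 1.0), ("C", 80.0, 0.0)],
                      "C": [("B", 80.0, 0.0)], "B": []}
    print(cleanest_route(streets, "A", "B", alpha=0.0))  # distance only
    print(cleanest_route(streets, "A", "B", alpha=1.0))  # exposure-aware
```

With alpha set to 0 the search returns the shortest route, and increasing alpha gradually trades distance for cleaner air, which makes the weighting itself a natural subject for the thesis.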

Contact: Clara Garcia-Sanchez, Hugo Ledoux

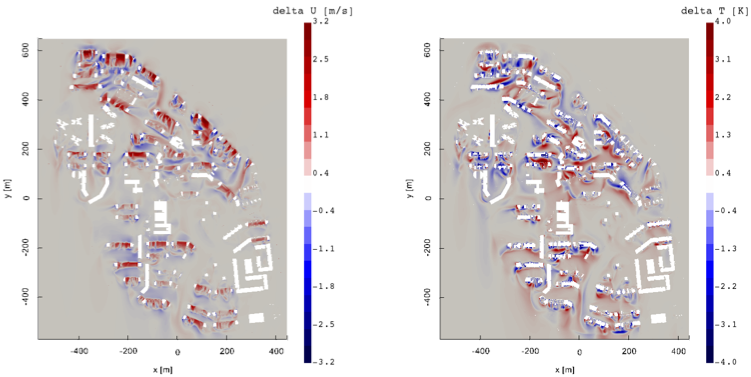

Predicting pedestrian wind comfort and thermal comfort with Large-Eddy Simulations in uDALES

Pedestrian wind and thermal comfort remain an important topic in the development of future urban scenarios. Given current climate change conditions, with an increased frequency of heat waves and extreme weather events, the way we design our cities can further impact their resilience and comfort. Computational fluid dynamics (CFD) approaches can help us improve and adapt current and future urban designs to maximize sustainability and comfort. To maximize predictive capability, approaches such as Large-Eddy Simulation (LES) can be used, which resolve most of the urban scales and model only the small scales.

Within this MSc thesis, we will exploit the capabilities of open-source tools such as uDALES to predict wind and thermal comfort in real urban scenarios. The initial set-up focuses on part of the Clementi neighbourhood in Singapore, which was already set up by a previous MSc thesis to run RANS simulations in Opsomer. Given the high computational cost of LES, this area could be reduced, or other test cases could also be explored. Attendance of elective course GEO5015 in Q4 or similar CFD knowledge is required.

Contact: Clara Garcia-Sanchez, Ivan Pađen

Optimizing building mesh designs for computational fluid dynamics using machine learning

Since one of the major burdens when performing computational fluid dynamics (CFD) simulations is setting up a good mesh, improving the current capabilities to automatically mesh complex geometries would have a large impact on the CFD community. This task becomes essential when geometries are complex, such as high-resolution level of detail buildings, and several hundred simulations need to be run to quantify uncertainties.

In this MSc thesis, we will apply the automatic meshers available in OpenFOAM (snappyHexMesh and cfMesh) and combine them with machine learning techniques to improve current mesh set-ups. We will start with simplified geometries with a low level of detail and progressively increase the detail. The results can potentially help us reduce the time spent designing our city meshes, and thus allow faster analyses.

If you work on this topic, you can expect to learn about mesh generation aligned with CFD best practice guidelines, set-ups, and flow simulations. Programming experience and interest are an advantage for this topic. Your work will require you to implement source code for the analysis of the set-ups (in C++ or Python).

Contact: Clara García-Sánchez and Ivan Pađen

To mesh or not to mesh: immersed boundary methods and porosity in OpenFOAM

One of the major burdens of computational fluid dynamics (CFD) simulations is setting up a good mesh. This task becomes very time-consuming when geometries are complex, such as high-resolution buildings. A few strategies avoid the need for an explicit mesh, such as immersed boundary methods or porosity definitions. Broadly speaking, these allow a regular mesh to be constructed, with the buildings represented through forces.
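
One common way to represent obstacles through forces in a porosity approach is a Darcy-Forchheimer momentum sink, which OpenFOAM offers as a porosity model: S = -(mu * d + 0.5 * rho * |u| * f) * u, with a viscous (Darcy) coefficient d and an inertial (Forchheimer) coefficient f. The sketch evaluates this sink in one cell for the isotropic case (scalar d and f, whereas OpenFOAM allows directional coefficients); the coefficient values are placeholders, and choosing them so that a porous block behaves like a building is exactly the kind of question this thesis would address.

```python
import math

def darcy_forchheimer_sink(u, mu, rho, d, f):
    """Momentum source (N/m^3) for velocity u (m/s) in an isotropic porous cell.

    mu: dynamic viscosity (Pa s), rho: density (kg/m^3),
    d: Darcy coefficient (1/m^2), f: Forchheimer coefficient (1/m).
    """
    speed = math.sqrt(sum(c * c for c in u))
    coeff = mu * d + 0.5 * rho * speed * f
    # The sink opposes the local velocity; its magnitude grows with speed
    return tuple(-coeff * c for c in u)


# Air at 5 m/s through a cell with placeholder coefficients
print(darcy_forchheimer_sink((5.0, 0.0, 0.0), mu=1.8e-5, rho=1.2, d=1e3, f=10.0))
```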

In this MSc thesis we will apply the immersed boundary method already developed in OpenFOAM-extended and compare it with a porosity approach on several standardized CFD test cases. The results could help reduce the number of buildings that must be meshed explicitly, and therefore the time spent designing our city mesh.

If you work on this topic, you can expect to learn about CFD best practice guidelines, simulation set-ups and flow simulations. You will also work with real experimental wind and turbulence data recorded in wind tunnels. Programming experience and interest are an advantage for this topic. Your work will involve implementing code to analyse the set-ups (in C++ or Python).

Contact: Clara García-Sánchez and Ivan Pađen.

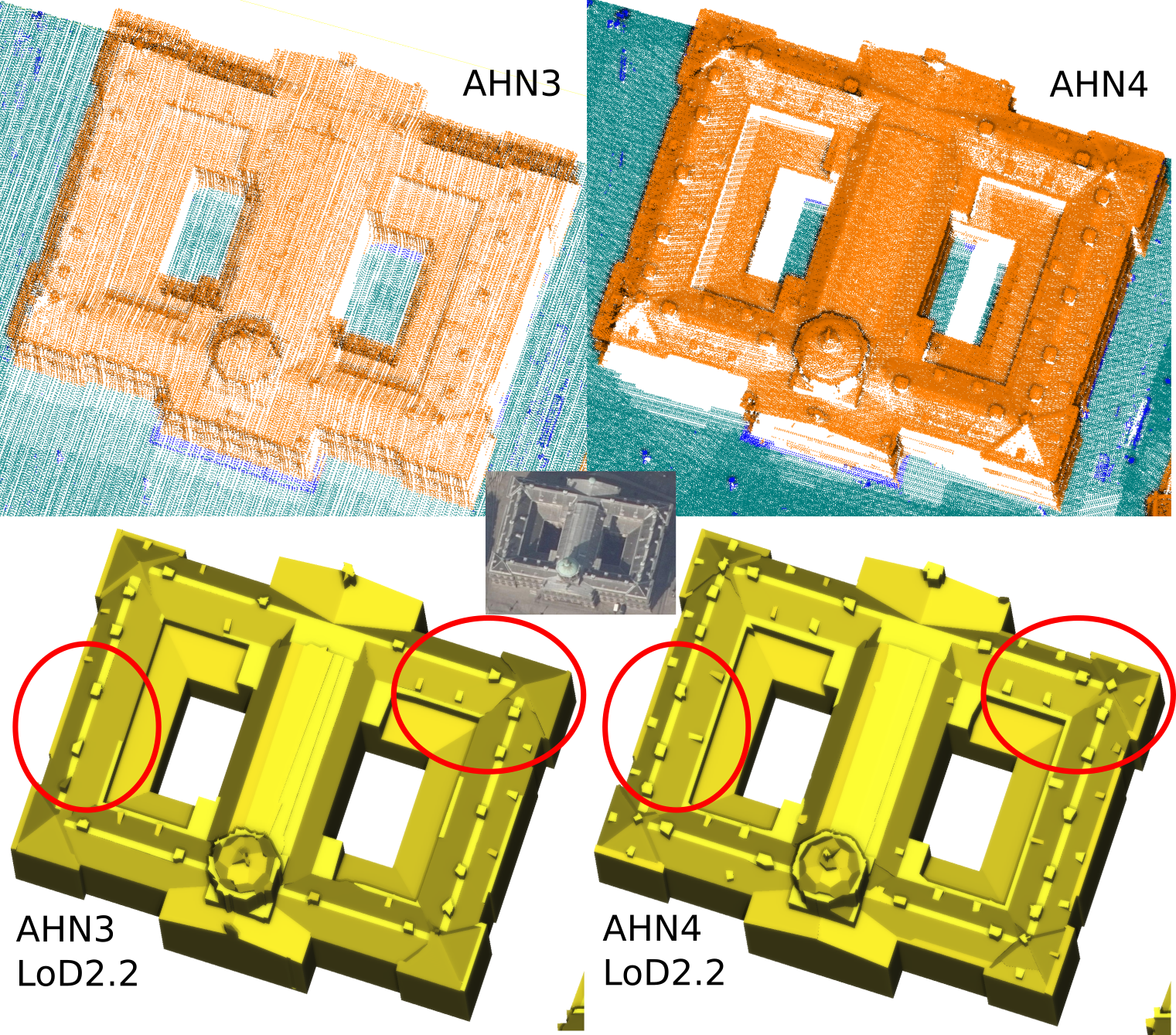

Semantically enriching the 3D BAG

With the 3D BAG we have LoD2.2 building models for the whole of the Netherlands. Unfortunately, the semantics of these models are still very simple: only a basic classification into wall, roof and floor surfaces is present. The goal of this project is to develop an automatic method to semantically enrich these models by labelling rooftop structures such as chimneys, A/C units and dormers, and/or by detecting facade elements such as doors and windows. This is to be achieved by analysing the geometry of the existing 3D BAG models, the source point cloud and/or (oblique) aerial photographs.
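
One possible starting point for detecting rooftop structures such as chimneys and A/C units in the source point cloud is to compare each point with the plane of the roof face it falls on: points lying well above the modelled roof are candidate superstructures. The sketch assumes the roof plane is given as z = a*x + b*y + c (e.g. derived from a 3D BAG roof face) and uses a hypothetical 0.3 m threshold; clustering the flagged points into objects and labelling them would be the actual research.

```python
def points_above_roof(points, plane, threshold=0.3):
    """Return the points lying more than `threshold` m above the roof plane.

    points: iterable of (x, y, z); plane: (a, b, c) with z = a*x + b*y + c.
    The 0.3 m default is a hypothetical value, not a calibrated one.
    """
    a, b, c = plane
    flagged = []
    for x, y, z in points:
        residual = z - (a * x + b * y + c)  # vertical distance to the roof face
        if residual > threshold:
            flagged.append((x, y, z))
    return flagged


roof = (0.0, 0.5, 3.0)  # hypothetical face sloping up along y
pts = [(1.0, 1.0, 3.52), (2.0, 2.0, 4.0), (2.0, 2.0, 5.2)]
print(points_above_roof(pts, roof))
```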

Programming in Python/C++ is required.

Contact: Hugo Ledoux + Ravi Peters

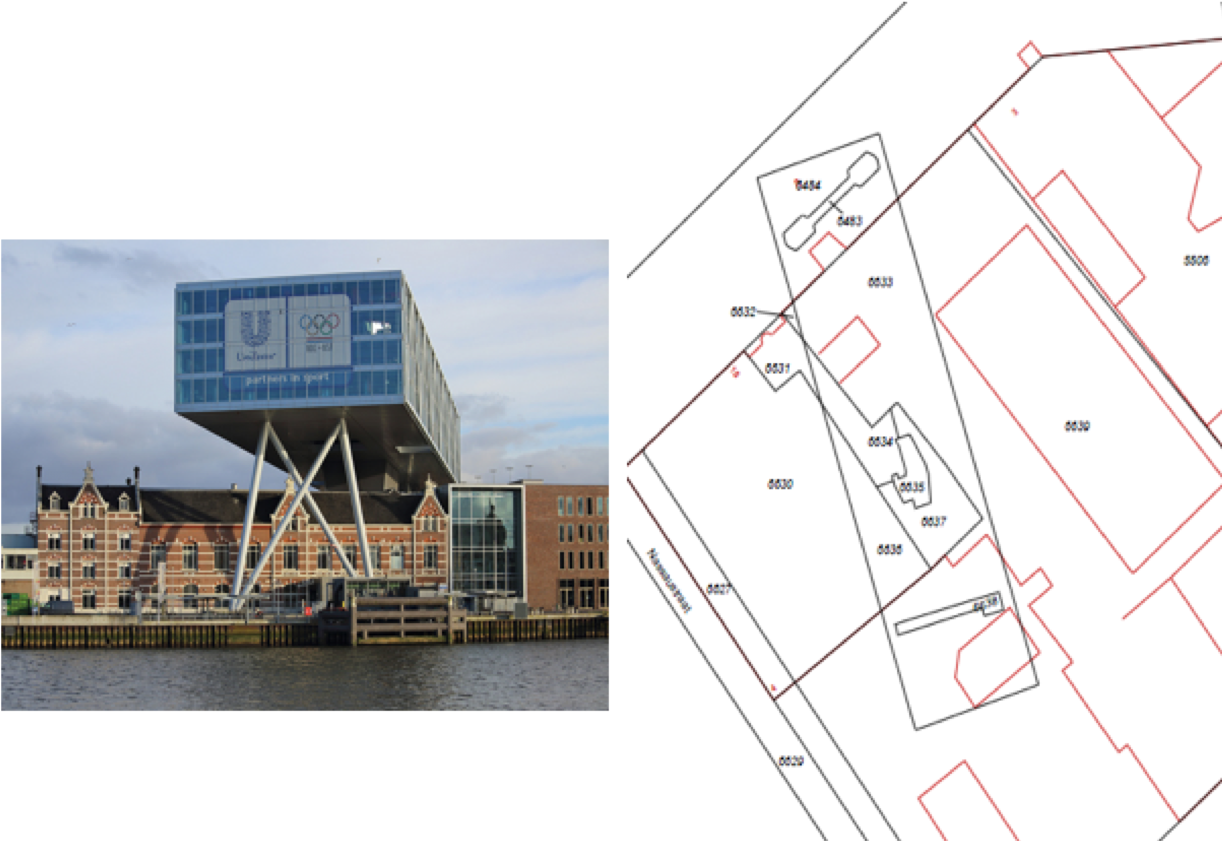

3D Cadastre

For more than 15 years, many studies have been carried out on 3D cadastre to register multilevel ownership in a transparent and proper way. In 2016, we realised the first 3D cadastral registration in the Netherlands. However, there is still a gap between research and practice. In this research you will analyse how a Level of Detail framework, which defines specific solutions for specific 3D cadastre problems, may help to close this research-to-practice gap. The idea is explained in this short paper.

Contact: Jantien Stoter

Performance and robustness of software libraries for computational geometry

Software libraries for computational geometry underpin a lot of our research, but an in-depth comparison of how these libraries behave in terms of performance and robustness is not available. For example, the feasibility of multidisciplinary use of geometry in BIM/GIS integration and in automated thermal analysis of IFC building models is largely shaped by the characteristics of the algorithms offered in open-source libraries such as CGAL and Open CASCADE. This research project is an opportunity to publish something novel, useful and relevant to many disciplines.
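
A classic illustration of the robustness issues such a comparison would probe is the 2D orientation predicate, which decides whether point r lies left of, right of, or on the line through p and q. Evaluated naively in floating point, it can return the wrong sign for nearly collinear points; CGAL, for instance, addresses this with exact or filtered arithmetic. The sketch compares a naive float evaluation with an exact one based on Python's `fractions.Fraction` (which converts each float to its exact binary value) and counts disagreements on a small grid of perturbed points, in the spirit of well-known classroom experiments on this problem. The grid and points are arbitrary choices, and the count depends on them.

```python
from fractions import Fraction

def _sign(v):
    return (v > 0) - (v < 0)


def orient2d_float(p, q, r):
    """Sign of the orientation determinant, evaluated in floating point."""
    return _sign((q[0] - p[0]) * (r[1] - p[1]) - (q[1] - p[1]) * (r[0] - p[0]))


def orient2d_exact(p, q, r):
    """Same determinant in exact rational arithmetic: +1 left turn, -1 right turn, 0 collinear."""
    px, py, qx, qy, rx, ry = (Fraction(c) for c in (*p, *q, *r))
    return _sign((qx - px) * (ry - py) - (qy - py) * (rx - px))


# Perturb p in steps of one ulp of 0.5 (2^-53) around a point nearly on the line q-r
q, r = (12.0, 12.0), (24.0, 24.0)
eps = 2.0 ** -53
disagreements = 0
for i in range(64):
    for j in range(64):
        p = (0.5 + i * eps, 0.5 + j * eps)
        if orient2d_float(p, q, r) != orient2d_exact(p, q, r):
            disagreements += 1
print("sign disagreements:", disagreements, "of", 64 * 64)
```

A benchmark for the thesis could run the same kind of degenerate and near-degenerate inputs through CGAL and Open CASCADE operations and record both the running time and whether the results are consistent.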

Contact: Thomas Krijnen or Ken Arroyo Ohori

Something with streaming TINs for massive datasets

The AHN3 dataset contains a lot of points (more than 600 billion), and while these are useful on their own, some applications would benefit from a TIN, isocontours, or objects extracted from them.

You learned in GEO1015 how to create a Delaunay TIN, and the theory of streaming geometries for massive TINs was explained.

The aim of this project is to extend the work already done (sst + one MSc thesis about simplification) and to add new operators useful for practitioners. Exactly which ones I am not sure yet, but if you like the challenge of dealing with several billion points, then we can find a good topic. The main candidates are creating grids with interpolation and extracting isolines.
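
Isoline extraction suits the streaming model because it can be done one triangle at a time: an isoline at height h crosses a triangle along the edges whose endpoints lie on opposite sides of h, and each crossing point follows from linear interpolation along the edge. The sketch handles a single triangle; chaining the segments into polylines and finalising them as parts of the stream are processed are the harder parts this project would tackle. Treating vertices exactly at h as "below" is just one simple convention to avoid degenerate cases.

```python
def isoline_segment(tri, h):
    """Segment where the plane z = h cuts triangle `tri`, or None.

    tri: three (x, y, z) vertices of a TIN triangle.
    Vertices with z == h count as below, a simple tie-breaking convention.
    """
    points = []
    for k in range(3):
        (x0, y0, z0), (x1, y1, z1) = tri[k], tri[(k + 1) % 3]
        if (z0 > h) != (z1 > h):  # edge crosses the level (so z0 != z1)
            t = (h - z0) / (z1 - z0)  # linear interpolation along the edge
            points.append((x0 + t * (x1 - x0), y0 + t * (y1 - y0)))
    # A triangle is crossed on either zero or two of its edges
    return tuple(points) if len(points) == 2 else None


print(isoline_segment([(0, 0, 0), (2, 0, 2), (0, 2, 2)], h=1))
```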

Contact: Hugo Ledoux

Developing methods for edge-matching with customisable heuristics (geometric, topological and semantic)

The methodology will be studied through a use case of Statistics Netherlands (CBS).

CBS is responsible for the bi-annual publication of the land use register (in Dutch: Bestand Bodem Gebruik or BBG). In this dataset, ground-level land use for areas of 1 ha or larger is classified into 20+ land use categories. Until now, the area demarcation and classification have been done manually, using a combination of aerial imagery and a selection of cadastral topographical maps. CBS is developing a new methodology to automatically combine topographical information and other administrative (register-based) datasets, with a manual fine-tuning post-process if needed. By overlaying and prioritizing polyline-based planes from a set of different topographical data sources, adding attributes to these areas from administrative data sources, and applying a number of geo-processes, a new set of planar partitions is created.

These automatically generated planar partitions will inevitably differ from the reference (manually coded) BBG of 2017, either in shape or in category. The challenge is to develop a method for describing and detecting important categorization and delineation issues, based on deviations from earlier versions of the BBG, and to develop (semi-)automated solutions to these issues, in order to minimize the required manual post-processing. This also includes resolving gaps, overlaps and disconnections in the context of the neighboring areas. Several heuristics are feasible; an important one combines the size and the nature of the deviating area. In such a heuristic, a small deviating area combined with a minor categorization difference (e.g. street vs living area) matters less than a large area combined with a major difference (e.g. forest vs living area).
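
The size-and-nature heuristic could, for instance, be expressed as a priority score that multiplies the area of a deviating region by a dissimilarity weight for the pair of land-use categories involved, so that manual post-processing starts with the deviations that matter most. The category names, the weights, the default weight and the multiplicative form are all hypothetical; defining and validating such weights with CBS would be part of the thesis.

```python
# Hypothetical dissimilarity weights between land-use categories (0 = same, 1 = very different)
DISSIMILARITY = {
    frozenset({"street", "living area"}): 0.2,
    frozenset({"forest", "living area"}): 1.0,
    frozenset({"forest", "recreation"}): 0.5,
}


def deviation_priority(area_ha, generated_cat, reference_cat, default_weight=0.7):
    """Priority of a deviation: larger area and larger category difference rank higher."""
    if generated_cat == reference_cat:
        return 0.0
    weight = DISSIMILARITY.get(frozenset({generated_cat, reference_cat}), default_weight)
    return area_ha * weight


# (id, area in ha, category in generated partition, category in reference BBG)
deviations = [
    ("a", 0.3, "street", "living area"),
    ("b", 4.0, "forest", "living area"),
    ("c", 1.5, "forest", "recreation"),
]
ranked = sorted(deviations, key=lambda d: deviation_priority(*d[1:]), reverse=True)
print([d[0] for d in ranked])
```

A multiplicative score is only one choice; thresholds per category pair, or taking into account the categories of the neighbouring areas, are equally plausible alternatives to explore.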

Contacts: Jantien Stoter + Ken Arroyo Ohori + someone at CBS

Modern metadata for CityJSON

The standard CityJSON (developed by us!) has some support for metadata. First, its core has a few useful properties, and second, there is an Extension (the MetadataExtended Extension) where most of the ISO19115 properties can be used. The issue is that ISO19115 is being replaced in practice by the SpatioTemporal Asset Catalog (STAC) specification and by the OGC API – Records. Those are targeted at imagery and (mostly) other 2D datasets. The aim of the project is to create a STAC extension so that 3D city models in CityJSON can be indexed and searched, and to (potentially) modify CityJSON. The project is quite exploratory, and will require building a prototype on the web where the ideas are demonstrated; see this page for some relevant links and work done by other MSc Geomatics students. This project fits in the new vision of the OGC to be “cloud-native”, see that interesting blog post. Why the photo of the dog? Because I thought everyone would skip reading this if they saw “metadata” in the title…
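
To give an idea of the starting point: a STAC Item is a GeoJSON Feature with, among others, `stac_version`, `id`, `geometry`, `bbox`, a `properties` object containing `datetime`, and `links` and `assets`. The sketch wraps one CityJSON file in such an Item. It assumes the file's `geographicalExtent` has already been reprojected to WGS84 longitude/latitude (STAC expects this, whereas CityJSON stores the extent in the file's own CRS). The `cityjson:*` property names, the media type `application/city+json`, the identifier and the example.org URL are placeholders, not an existing extension.

```python
import json

def cityjson_stac_item(item_id, extent_wgs84, datetime_iso, href, cj_version, lods):
    """Minimal STAC Item for one CityJSON file.

    extent_wgs84: (minx, miny, minz, maxx, maxy, maxz), already in WGS84 lon/lat.
    The "cityjson:*" properties are hypothetical extension fields.
    """
    minx, miny, minz, maxx, maxy, maxz = extent_wgs84
    ring = [[minx, miny], [maxx, miny], [maxx, maxy], [minx, maxy], [minx, miny]]
    return {
        "type": "Feature",
        "stac_version": "1.0.0",
        "id": item_id,
        "bbox": [minx, miny, minz, maxx, maxy, maxz],  # 3D bbox, as GeoJSON allows
        "geometry": {"type": "Polygon", "coordinates": [ring]},
        "properties": {
            "datetime": datetime_iso,
            "cityjson:version": cj_version,  # hypothetical
            "cityjson:lods": lods,           # hypothetical
        },
        "links": [],
        "assets": {
            # Media type assumed for CityJSON files
            "data": {"href": href, "type": "application/city+json", "roles": ["data"]},
        },
    }


item = cityjson_stac_item("tile-0001", (4.35, 51.99, -5.0, 4.38, 52.01, 80.0),
                          "2023-01-01T00:00:00Z", "https://example.org/tile-0001.city.json",
                          "2.0", ["1.2", "2.2"])
print(json.dumps(item, indent=2))
```

Deciding which 3D-specific properties (LoDs, city object types, vertical extent) deserve a place in such an extension, and whether CityJSON itself should change, is the open part of the project.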

Contact: Hugo Ledoux

Supporting earthquake risk assessment by 3D city models

Earthquake risk assessment models predict the probability of buildings being damaged by earthquakes. These models make use of building typologies that describe clusters of buildings with similar seismic vulnerabilities. Recent advances in the digital transformation of the built environment present new opportunities to conduct earthquake risk studies at the level of individual buildings instead of clustering similar buildings. Such a change in risk modeling would have a large effect on studies aimed at finding buildings vulnerable to collapse before a destructive earthquake. In this thesis, the MSc student will investigate how the parameters defining the building typology can be derived automatically from 3D city models, and whether more parameters can be derived to refine the typology and improve the earthquake risk assessment models. Examples are: the complexity of the roof geometry (single or dual pitch), the height of the vertical walls and the area of the roofs (to estimate the mass), the height of free-standing veneer walls, storey heights, etc. For the research, a validation dataset is available consisting of 400 buildings in Groningen for which the parameters were collected in a field survey.
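
Several of these parameters follow directly from the surfaces of a semantic 3D city model. For example, the area and pitch of a planar roof surface can be computed from its vertices with Newell's method, which yields a normal vector whose length is twice the polygon area, and a wall height can be approximated by the vertical extent of a wall surface. The sketch assumes planar, non-self-intersecting polygons given as lists of (x, y, z) vertices, as in a CityJSON or CityGML boundary ring; turning these values into mass estimates is left out.

```python
import math

def newell_normal(ring):
    """Non-normalised normal of a planar 3D polygon (Newell's method)."""
    nx = ny = nz = 0.0
    for (x0, y0, z0), (x1, y1, z1) in zip(ring, ring[1:] + ring[:1]):
        nx += (y0 - y1) * (z0 + z1)
        ny += (z0 - z1) * (x0 + x1)
        nz += (x0 - x1) * (y0 + y1)
    return nx, ny, nz


def area_and_pitch(ring):
    """Area (m^2) and pitch (degrees from horizontal) of a planar roof polygon."""
    nx, ny, nz = newell_normal(ring)
    length = math.sqrt(nx * nx + ny * ny + nz * nz)
    return length / 2.0, math.degrees(math.acos(abs(nz) / length))


def wall_height(ring):
    """Vertical extent of a wall polygon (m)."""
    zs = [z for _, _, z in ring]
    return max(zs) - min(zs)


roof = [(0, 0, 0), (2, 0, 0), (2, 1, 1), (0, 1, 1)]  # one slope of a pitched roof
print(area_and_pitch(roof))  # area = 2*sqrt(2), about 2.83; pitch about 45 degrees
print(wall_height([(0, 0, 0), (2, 0, 0), (2, 0, 3), (0, 0, 3)]))
```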

Contact: Jantien Stoter, in collaboration with Ihsan Bal, Professor in Earthquake Resistant Structures, Hanze University of Applied Sciences Groningen & Research Centre for Built Environment NoorderRuimte.

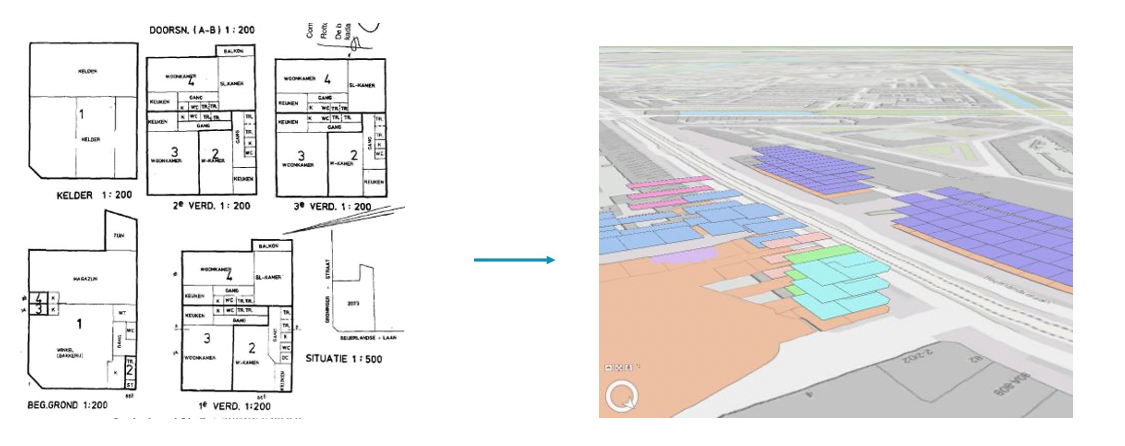

Reconstructing 3D apartment units from legal apartment drawings

Ownership rights concerning apartments are registered by the Netherlands' Cadastre (Kadaster) in deeds that divide complete apartment complexes into individual units, the so-called ‘splitsingsaktes’. These (originally analogue) deeds contain floor plans that show how the units are divided per floor. Kadaster would like to convert these scanned 2D floor plans into 3D geometries positioned in geographical space, to obtain a subdivision of 3D building models into apartment units. So far, a pipeline has been developed to vectorize the information from the 2D floor plans; the end results are 2D polygons describing the separate floors. To obtain the 3D geometry, the original building geometry needs to be reconstructed. This includes scaling and georeferencing the 2D polygons, as well as finding ways to properly position the different floors of an apartment complex in 3D. The main research focus will be on the 3D reconstruction of the apartment units. Questions to address are: What (additional) information would be needed to georeference and scale the floor plans? Can this information be obtained from the textual part of the deed? What would be proper ways to position building floor plans in 3D? Could the storey heights be estimated?
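
For the scaling and georeferencing step, a minimal starting point is a 2D similarity transform (uniform scale, rotation, translation) estimated from two control points whose positions are known both in the floor-plan drawing and in map coordinates, e.g. two building corners matched to a 3D BAG footprint (an assumption; finding such matches automatically is part of the research questions above). Writing points as complex numbers, the transform is w = s*z + t, where the complex factor s encodes the scale |s| and the rotation arg(s). With more than two control points one would use a least-squares fit instead, and a mirrored drawing would need an extra reflection that this sketch does not handle.

```python
import cmath
import math

def similarity_from_two_points(src, dst):
    """Return a function mapping drawing (x, y) to map (X, Y) from two point pairs."""
    z1, z2 = (complex(*p) for p in src)
    w1, w2 = (complex(*p) for p in dst)
    s = (w2 - w1) / (z2 - z1)  # scale and rotation combined
    t = w1 - s * z1            # translation

    def transform(p):
        w = s * complex(*p) + t
        return (w.real, w.imag)

    transform.scale = abs(s)
    transform.rotation_deg = math.degrees(cmath.phase(s))
    return transform


# Hypothetical control points: drawing units -> map metres
f = similarity_from_two_points(src=[(0, 0), (1, 0)], dst=[(10, 20), (10, 22)])
print(f((1, 1)), f.scale, f.rotation_deg)
```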

Contact: Jantien Stoter, in collaboration with Kadaster

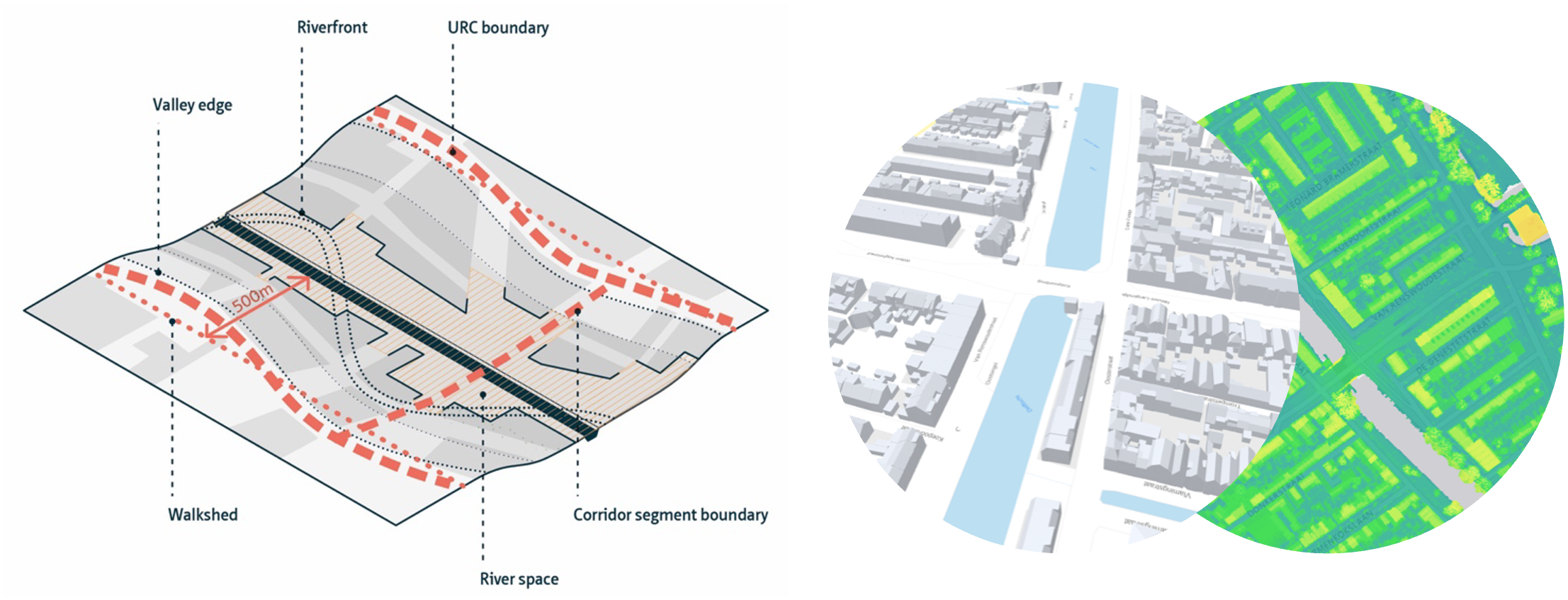

3D delineation of urban river spaces

A wide range of applications in riverside urban areas, including flood mitigation, transport planning, ecological restoration and public space design, rely on an accurate spatial description of riverside urban spaces. While methods of spatial delineation based on 2D geospatial data exist (Forgaci, 2018), a method for automated spatial delineation based on 3D data is missing. A 3D delineation method would better capture the spatial qualities of urban river spaces.

This thesis will develop a 3D delineation method for urban river spaces by adapting an existing 2D delineation method. The method will be based on 3D data (3D BAG, point clouds and other elevation data) for use in any riverside urban area where such data is available. The thesis will use open data as much as possible and will address challenges and opportunities regarding the scalability of the method within the Netherlands and globally.

Contact: Jantien Stoter and Claudiu Forgaci

Estimating noise pollution with machine learning?

The government needs to produce so-called “noise maps”, that is, maps showing where noise levels are higher than a threshold set for the well-being and health of citizens (see the RIVM site on the topic). These maps are created by modelling the noise, not by measuring values everywhere. These models are rather complex, and collecting and preparing the necessary (3D) data are time-consuming and intensive tasks (see a recent project by the 3D geoinformation group, and a Synthesis project in 2020).

The aim of this MSc thesis is to learn from those models (and/or samples collected on the ground) and to build a model with machine learning that will predict the noise pollution in an area.

I think that features related to the urban morphology (density of buildings, height of buildings, width of streets, etc.), the distance to noise sources (roads, factories, etc.), and vegetation could be good predictors. But I don’t know; it would be for you to tell me whether this is indeed the case.

The project can be done with Python and scikit-learn, and involves crunching lots of (3D) data about cities.
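
As an illustration of the kind of predictors mentioned above, the sketch computes two candidate features for a receiver point: the distance to the nearest road segment and the mean building height within a radius. The data layout (roads as 2D segments, buildings as (x, y, height) centroids), the 100 m radius and all values are assumptions; in the thesis, such feature vectors would be fed to a scikit-learn regressor trained on modelled or measured noise levels.

```python
import math

def point_segment_distance(p, a, b):
    """Euclidean distance from point p to segment ab (2D)."""
    (px, py), (ax, ay), (bx, by) = p, a, b
    dx, dy = bx - ax, by - ay
    length_sq = dx * dx + dy * dy
    # Parameter of the projection of p onto the line, clamped to the segment
    t = 0.0 if length_sq == 0 else max(0.0, min(1.0, ((px - ax) * dx + (py - ay) * dy) / length_sq))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))


def noise_features(p, roads, buildings, radius=100.0):
    """Hypothetical feature vector: [distance to nearest road, mean building height nearby]."""
    d_road = min(point_segment_distance(p, a, b) for a, b in roads)
    heights = [h for x, y, h in buildings if math.hypot(x - p[0], y - p[1]) <= radius]
    mean_h = sum(heights) / len(heights) if heights else 0.0
    return [d_road, mean_h]


roads = [((-50.0, 0.0), (50.0, 0.0))]
buildings = [(10.0, 20.0, 12.0), (-30.0, 40.0, 18.0), (400.0, 0.0, 90.0)]
print(noise_features((0.0, 25.0), roads, buildings))
```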

Contact: Hugo Ledoux

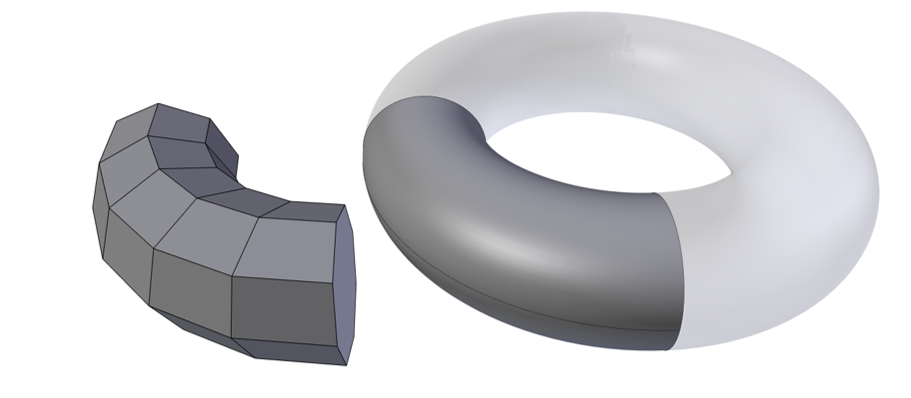

How can 3D alpha wrapping be best used to repair buildings?

The CGAL project has just released, in beta version, a new package: 3D alpha wrapping, which can be used to repair pretty much any 3D input. It guarantees that you’ll obtain a 2-manifold that is “watertight” and free of intersections.

As can be seen in the figure above (taken from their website), it works by refining the surface (using the alpha-shape concept). As a result, to recover sharp edges (very frequent in 3D buildings), many Steiner points are added and the output contains a lot of triangles; this takes a long time to compute, and those extra triangles are unwanted.

The project would be to explore either how this algorithm can be modified so that it performs well for 3D buildings, or how its output can be post-processed to remove points and/or simplify the mesh. One application of such repaired 3D models is CFD simulation, so we could focus on that application, or keep the work generic; this can be discussed.

Also, how the semantics of surfaces could be preserved from the input, or inferred for the output, is another aspect of the research.
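
For the "inferred for the output" part, a simple baseline is to label each output triangle by the direction of its normal. The sketch assumes consistently oriented triangles with outward-pointing normals and a hypothetical tolerance for what counts as vertical; the labels reuse the CityGML class names. It naively labels every downward-facing triangle as ground, so overhangs and similar cases would be misclassified, which is where transferring semantics from the input becomes interesting.

```python
import math

def classify_triangle(a, b, c, wall_tol=0.1):
    """Label a triangle RoofSurface, WallSurface or GroundSurface from its normal.

    a, b, c: (x, y, z) vertices, counter-clockwise when seen from outside.
    wall_tol: |n_z| of the unit normal below this counts as vertical (hypothetical value).
    """
    u = [b[i] - a[i] for i in range(3)]
    v = [c[i] - a[i] for i in range(3)]
    # Cross product u x v gives the (outward) normal
    n = (u[1] * v[2] - u[2] * v[1], u[2] * v[0] - u[0] * v[2], u[0] * v[1] - u[1] * v[0])
    length = math.sqrt(sum(x * x for x in n))
    if length == 0:
        return None  # degenerate triangle
    nz = n[2] / length
    if abs(nz) < wall_tol:
        return "WallSurface"
    return "RoofSurface" if nz > 0 else "GroundSurface"


print(classify_triangle((0, 0, 0), (1, 0, 0), (0, 1, 0)))  # normal +z
print(classify_triangle((0, 0, 0), (1, 0, 0), (0, 0, 1)))  # normal -y
```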

The project must be done in C++ using CGAL.

Contact: Hugo Ledoux

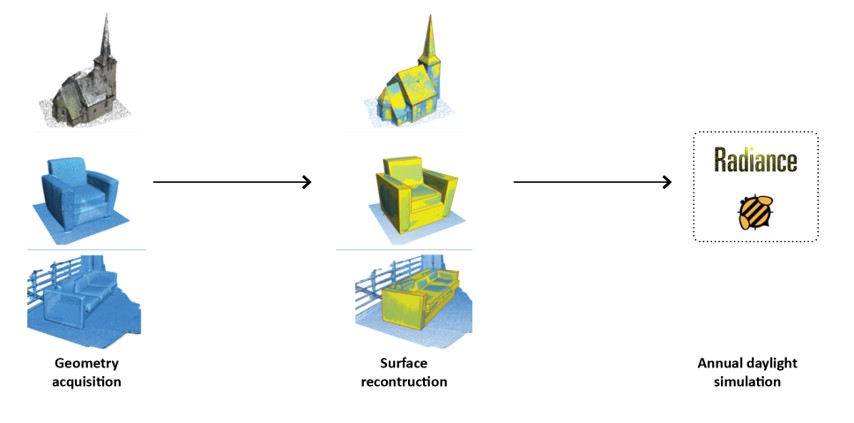

Automatic generation of digital twins for heritage buildings

Background and aim: The geometrical model is an essential part of daylight simulation in existing buildings. These models are commonly constructed with point-by-point field measurements and manual modeling in CAD environments, such as Rhinoceros and SketchUp. This workflow is costly for many buildings and projects, and is a practical barrier to accurate daylight simulations and informed refurbishment decisions. Results from novel techniques in point-cloud semantic segmentation (e.g., with convolutional neural networks) and light-weight polygonal reconstruction of various objects from scanned indoor point clouds have been promising, and these techniques are expected to automate reconstruction tasks in relevant domains, such as daylight simulation, in the future. The goal of this project is to (semi-)automatically reconstruct the digital twin of a historic building and to evaluate the daylight it receives, based on the requirements of heritage preservation.
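
Light-weight polygonal reconstruction of interiors typically starts by detecting planar primitives (walls, floor, ceiling) in the scanned point cloud. RANSAC is one standard technique for this: repeatedly fit a plane through three random points and keep the plane supported by the most points within a distance threshold. The sketch detects a single plane on toy data; the threshold, iteration count and data are assumptions, and a real pipeline would extract several planes, refine them by least squares and then assemble polygons from them.

```python
import math
import random

def ransac_plane(points, threshold=0.01, iterations=200, seed=0):
    """Best-supported plane (n, d) with n . p + d = 0, and its inlier list."""
    rng = random.Random(seed)
    best_plane, best_inliers = None, []
    for _ in range(iterations):
        p, q, r = rng.sample(points, 3)
        u = [q[i] - p[i] for i in range(3)]
        v = [r[i] - p[i] for i in range(3)]
        n = [u[1] * v[2] - u[2] * v[1], u[2] * v[0] - u[0] * v[2], u[0] * v[1] - u[1] * v[0]]
        norm = math.sqrt(sum(c * c for c in n))
        if norm < 1e-12:
            continue  # collinear sample, no unique plane
        n = [c / norm for c in n]
        d = -sum(n[i] * p[i] for i in range(3))
        # Inliers: points within `threshold` of the candidate plane
        inliers = [s for s in points if abs(sum(n[i] * s[i] for i in range(3)) + d) <= threshold]
        if len(inliers) > len(best_inliers):
            best_plane, best_inliers = (n, d), inliers
    return best_plane, best_inliers


# Toy scan: a 5 x 4 patch of floor at z = 0 plus three clutter points
floor = [(float(x), float(y), 0.0) for x in range(5) for y in range(4)]
clutter = [(1.0, 1.0, 0.8), (3.0, 2.0, 1.5), (0.5, 3.0, 2.2)]
plane, inliers = ransac_plane(floor + clutter)
print(plane, len(inliers))
```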

Research question: How to build digital twins for heritage buildings automatically with minimum onsite cost?

Methods: Literature search to find potential pipelines and techniques for surface reconstruction. Numerical ray-tracing simulation. Potentially, a few site visits for data acquisition.

Final results: (a) Pipelines, algorithms, and workflows for automatic modeling of building interior for daylight simulation. (b) Software prototype for practitioners (optional).

Contact: Eleonora Brembilla, Nima Forouzandeh, Uta Pottgiesser, and Jantien Stoter

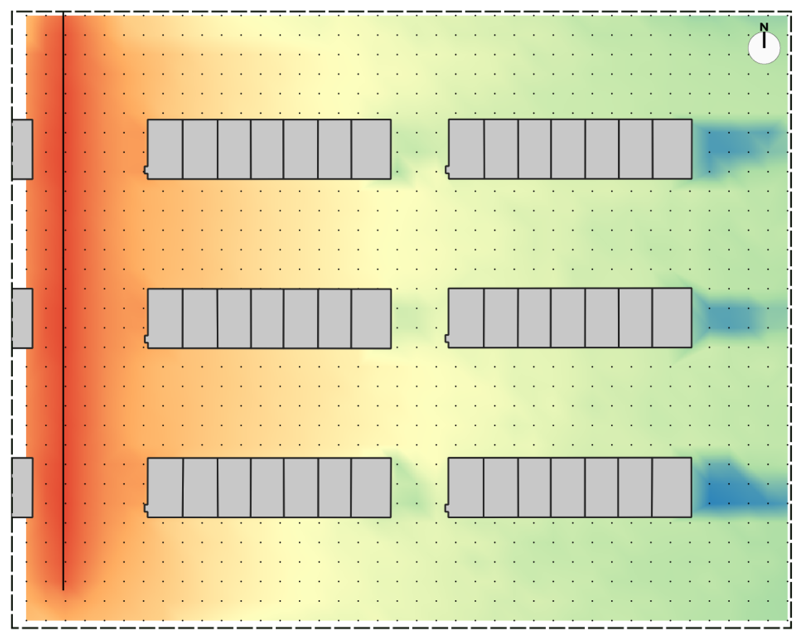

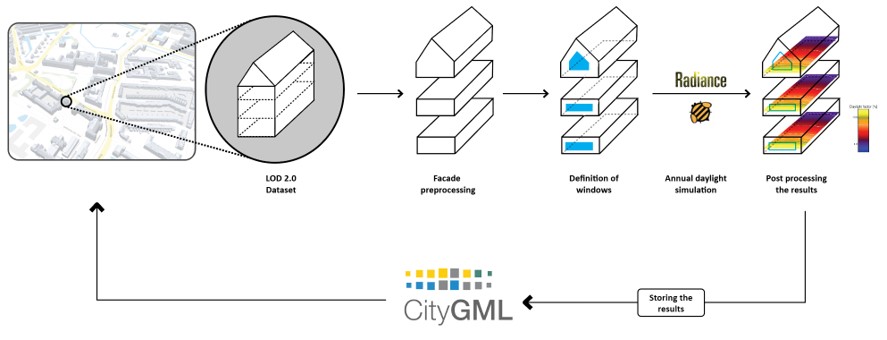

Urban building daylight modeling – improving city models

Background and aim: Decarbonization and improvement of the building stock cannot be realistically planned without considering the existing buildings. Decision-makers need accurate models at different levels of granularity for different types of decision-making. A crucial aspect of building performance is the availability of natural light in indoor spaces, which has a direct impact on users’ well-being and comfort, as well as on reducing electric lighting consumption. The aim of this project is an urban-level assessment of buildings in terms of their daylight performance. LoD2 geometry and typical material properties will be used as the key inputs, and Radiance as the simulation engine.

Research question: To what extent does the existing building stock meet the requirements for daylight availability? How can the existing building stock be modelled efficiently at the urban level?

Methods: General literature search to find potential pipelines and techniques, and to understand city-level geometry data models (CityGML). Numerical simulation of daylight.

Final results: (a) Urban-level assessments of buildings' daylight availability, or visual comfort. (b) Suggestions for policy-makers to improve daylight availability in existing buildings. (c) Suggestions (and implementation) for improving the CityGML data model and its Application Domain Extension (ADE).

Contact: Eleonora Brembilla, Nima Forouzandeh, Camilo León-Sánchez, and Giorgio Agugiaro.

Reconstructing permanent indoor structures from multi-view images

Reconstructing 3D models of the permanent structures of indoor scenes has many applications, e.g., renovation, navigation, and room layout design and planning. Traditional methods require dedicated devices (e.g., laser scanners) to capture indoor environments, which only a limited number of users can afford. They also require careful positioning of the scanner and registration of the point clouds obtained at different locations. Recently developed image-based methods (i.e., MVS and its variants) are successful in reconstructing large-scale outdoor environments, but the major obstacle to applying them to indoor scenes is the lack of rich texture, so that too few image correspondences can be established to derive 3D geometry. This project focuses on exploiting piecewise planar prior knowledge about indoor scenes to establish patch (i.e., planar region) correspondences between images. The core is to extend the existing multi-view theoretical framework to incorporate piecewise planar constraints in the reconstruction pipeline. The developed technique will enable 3D surface reconstruction not only of texture-less indoor scenes but also of low-texture piecewise planar objects in general.
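
The link between a planar patch and its appearance in two views is the standard plane-induced homography: if the patch satisfies n^T X = d in the first camera's frame and the second camera is related by X' = R X + t, then pixels of the patch map between the images through H = K2 (R + t n^T / d) K1^-1. Hypothesising a plane for a texture-less patch therefore predicts where the whole patch must appear in the other view, which is one way to obtain patch correspondences where point matching fails. The sketch applies this formula in pure Python with a toy camera setup (zero skew, square pixels); note that sign conventions differ between references (with n^T X + d = 0 the term becomes -t n^T / d).

```python
def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def intrinsics(f, cx, cy):
    return [[f, 0.0, cx], [0.0, f, cy], [0.0, 0.0, 1.0]]


def intrinsics_inv(f, cx, cy):
    # Closed-form inverse for zero skew and square pixels
    return [[1.0 / f, 0.0, -cx / f], [0.0, 1.0 / f, -cy / f], [0.0, 0.0, 1.0]]


def plane_homography(K1_inv, K2, R, t, n, d):
    """H = K2 (R + t n^T / d) K1^-1 for the plane n^T X = d in camera-1 coordinates."""
    M = [[R[i][j] + t[i] * n[j] / d for j in range(3)] for i in range(3)]
    return matmul(K2, matmul(M, K1_inv))


def apply_h(H, x, y):
    u, v, w = (H[i][0] * x + H[i][1] * y + H[i][2] for i in range(3))
    return (u / w, v / w)


# Toy setup: camera 2 centred 0.5 m along +x of camera 1 (t = -C2), wall plane z = 4 m
f, cx, cy = 500.0, 320.0, 240.0
I = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
H = plane_homography(intrinsics_inv(f, cx, cy), intrinsics(f, cx, cy),
                     I, [-0.5, 0.0, 0.0], [0.0, 0.0, 1.0], 4.0)
print(apply_h(H, 320.0, 240.0))
```

The principal point of the first image hits the wall at (0, 0, 4); seen from the second camera that point lies 0.5 m to the left, i.e. 500 * 0.5 / 4 = 62.5 pixels left of the principal point.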

Required skills: (1) Proficient in programming. (2) Enthusiastic about 3DV modeling and geometry processing.

Contact: Liangliang Nan

BuildingBlocks: Enhancing 3D urban understanding and reconstruction with a comprehensive multi-modal dataset

Deep learning research has facilitated significant advancements in large-scale urban scene understanding and reconstruction. However, current methods are limited to coarse levels of scene perception and 3D reconstruction. To bridge this gap and propel research and applications to the next level, fine-grained understanding and 3D reconstruction of urban buildings are necessary. Unfortunately, the lack of suitable datasets for training powerful neural networks hinders progress in this area.

This research aims to bridge this gap by introducing BuildingBlocks, a multi-modal, feature-rich, large-scale, and detailed 3D building dataset. BuildingBlocks encompasses 3D building models at LoD3+ levels, corresponding point clouds, multi-view images, camera parameters, and wireframe models for several expansive urban scenes, with fine-grained annotations at the semantic, instance, and part levels for all modalities. With these multi-modal data sources and rich correspondences between different modalities, this project will benchmark state-of-the-art methods and develop novel techniques for highly automated and detailed 3D building reconstruction.
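
The correspondences between modalities rest on the camera parameters: with intrinsics K and pose (R, t), a 3D point X from a building model or point cloud projects to pixel coordinates via x ~ K (R X + t). The sketch shows this pinhole projection, which is how, for example, semantic labels on 3D points could be transferred to image pixels or checked against image annotations. No lens distortion, the toy values and ignoring visibility (occlusion) are assumptions.

```python
def project(X, K, R, t):
    """Pixel (u, v) of world point X for camera K, R, t; None if behind the camera."""
    # Transform the point into the camera frame
    Xc = [sum(R[i][j] * X[j] for j in range(3)) + t[i] for i in range(3)]
    if Xc[2] <= 0:
        return None
    # Perspective division followed by the intrinsic mapping (K upper triangular)
    u = K[0][0] * Xc[0] / Xc[2] + K[0][1] * Xc[1] / Xc[2] + K[0][2]
    v = K[1][1] * Xc[1] / Xc[2] + K[1][2]
    return (u, v)


K = [[100.0, 0.0, 50.0], [0.0, 100.0, 50.0], [0.0, 0.0, 1.0]]
R = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
print(project((1.0, 2.0, 10.0), K, R, [0.0, 0.0, 0.0]))
```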

In short, BuildingBlocks will provide a valuable resource for advancing research in deep learning-based urban understanding and 3D reconstruction, enabling fine-grained analysis and detailed modeling of urban buildings for various applications.

Required skills: (1) Proficient in programming. (2) Enthusiastic about 3D modeling and deep learning.

Contact: Liangliang Nan

Holistic indoor scene understanding and reconstruction

This project is for MSc students interested in cutting-edge 3D vision and scene understanding.

Holistic indoor scene reconstruction from a single image (or a few images), especially using implicit representations, pushes the boundaries of what machines can perceive from minimal input. This project explores high-fidelity recovery of both objects and complex room geometries, enabling applications in robotics, AR/VR, and smart environments. Whether your interest lies in detailed shape modeling, occlusion-aware perception, or efficient scene approximation, there’s ample room for innovation. You can choose to focus on the full reconstruction pipeline or zoom in on specific aspects like scene layout understanding or surface reconstruction. This topic provides a solid foundation for research that is both technically rigorous and highly relevant to emerging real-world applications.

Required skills: (1) Enthusiastic about 3D modeling and deep learning. (2) Proficient in programming.

Contact: Liangliang Nan