Manhattan-world Urban Reconstruction from Point Clouds

ECCV 2016

Minglei Li 1,2, Peter Wonka 1, Liangliang Nan 1

1 King Abdullah University of Science and Technology (KAUST), KSA

2 Nanjing University of Aeronautics and Astronautics (NUAA), China

Abstract

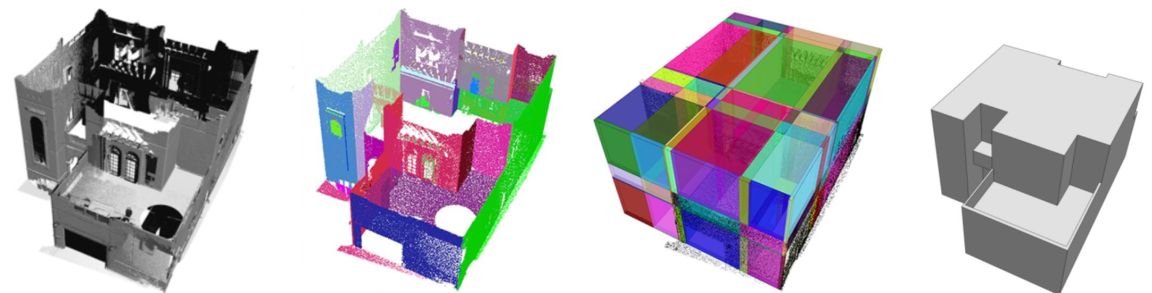

Manhattan-world urban scenes are common in the real world. We propose a fully automatic approach for reconstructing such scenes from 3D point samples. Our key idea is to represent the geometry of the buildings in the scene using a set of well-aligned boxes. We first extract plane hypotheses from the points and then refine them iteratively. Next, candidate boxes are obtained by partitioning the space of the point cloud into a non-uniform grid. Finally, we choose an optimal subset of the candidate boxes to approximate the geometry of the buildings. The main contribution of our work is that we transform scene reconstruction into a labeling problem, which we solve with a novel Markov Random Field formulation. Unlike previous methods designed for particular types of input point clouds, our method obtains faithful reconstructions from a variety of data sources. Experiments demonstrate that our method is superior to state-of-the-art methods.
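The abstract names the pipeline steps but does not give the energy being minimized. The Python sketch below illustrates the last two steps, building candidate boxes from a non-uniform grid and labeling them, under several assumptions. First, the refined planes have already been reduced to sorted offsets along x, y and z. Second, each box carries a hypothetical `occupancy` score in [0, 1] that says how well the points support it. Third, the smoothness term is a Potts penalty weighted by the area of the shared face. Fourth, iterated conditional modes (ICM), a simple local MRF solver, stands in for whatever optimizer the paper actually uses. All function and parameter names are illustrative and are not taken from the released code.

```python
from itertools import product


def candidate_boxes(xs, ys, zs):
    """Cut space with axis-aligned planes at the sorted offsets xs, ys, zs
    (assumed to come from the refined planes). Each cell of the resulting
    non-uniform grid is one candidate box ((x0, x1), (y0, y1), (z0, z1))."""
    return {
        (i, j, k): ((xs[i], xs[i + 1]), (ys[j], ys[j + 1]), (zs[k], zs[k + 1]))
        for i, j, k in product(range(len(xs) - 1), range(len(ys) - 1), range(len(zs) - 1))
    }


def face_area(box, axis):
    """Area of the box face perpendicular to `axis` (0 = x, 1 = y, 2 = z)."""
    a, b = [hi - lo for ax, (lo, hi) in enumerate(box) if ax != axis]
    return a * b


def neighbors(idx, shape):
    """Yield (neighbor index, axis) for every face-adjacent cell in the grid."""
    for axis in range(3):
        for step in (-1, 1):
            n = list(idx)
            n[axis] += step
            if 0 <= n[axis] < shape[axis]:
                yield tuple(n), axis


def local_cost(boxes, shape, occupancy, labels, idx, label, smooth_weight):
    """Data term plus area-weighted Potts penalty for giving `idx` the label `label`."""
    data = occupancy[idx] if label == 0 else 1.0 - occupancy[idx]
    smooth = sum(face_area(boxes[idx], axis)
                 for n, axis in neighbors(idx, shape) if labels[n] != label)
    return data + smooth_weight * smooth


def total_energy(boxes, shape, occupancy, labels, smooth_weight=0.1):
    """Full MRF energy. Each adjacent pair is counted once (n > idx)."""
    data = sum(occupancy[i] if labels[i] == 0 else 1.0 - occupancy[i] for i in boxes)
    smooth = sum(face_area(boxes[idx], axis)
                 for idx in boxes for n, axis in neighbors(idx, shape)
                 if n > idx and labels[n] != labels[idx])
    return data + smooth_weight * smooth


def label_boxes_icm(boxes, shape, occupancy, smooth_weight=0.1, max_iters=20):
    """Binary labeling (1 = box is part of a building) with ICM.
    ICM is a swapped-in local solver and only reaches a local minimum."""
    labels = {idx: int(occupancy[idx] >= 0.5) for idx in boxes}
    for _ in range(max_iters):
        changed = False
        for idx in boxes:
            c0 = local_cost(boxes, shape, occupancy, labels, idx, 0, smooth_weight)
            c1 = local_cost(boxes, shape, occupancy, labels, idx, 1, smooth_weight)
            best = 1 if c1 < c0 else 0
            if best != labels[idx]:
                labels[idx] = best
                changed = True
        if not changed:
            break
    return labels


# Usage: two boxes side by side along x. The weakly supported box is pulled
# into the building because the smoothness term penalizes cutting their shared face.
xs, ys, zs = [0, 1, 3], [0, 2], [0, 1]
boxes = candidate_boxes(xs, ys, zs)
shape = (len(xs) - 1, len(ys) - 1, len(zs) - 1)
occupancy = {(0, 0, 0): 0.9, (1, 0, 0): 0.45}
print(label_boxes_icm(boxes, shape, occupancy))
```

When `smooth_weight=0.0`, the labeling reduces to thresholding the occupancy scores. Larger values make boxes agree with their neighbors, which favors compact, closed building shapes. A global solver such as graph cuts would be the usual choice for a binary energy of this form in practice.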

Overview

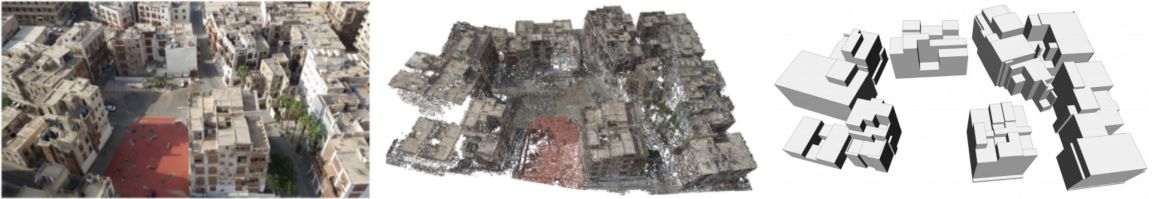

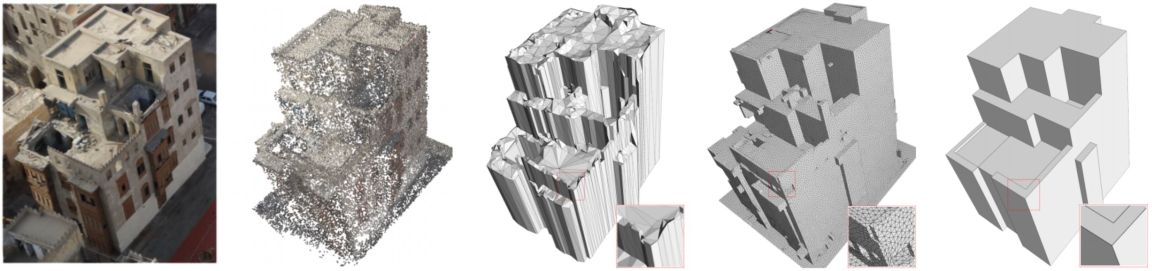

Results

Figure 3: Comparisons with two state-of-the-art methods. From left to right: an aerial photograph of the building, the MVS point cloud, the reconstruction from the 2.5D dual contouring method [33], the result of the L1-based polycube method [9], and ours.

Download

Poster [3.7M]

Video [28M]

Data & Results [7M]

Code & Executable [51M]. Code also available on GitHub.

Acknowledgements

We thank the reviewers for their valuable comments, Dr. Neil Smith for providing us with the data used in Figure 7, and NVIDIA Corporation for the donation of the Quadro K5200 GPU used for rendering the point clouds. This work was supported by the Office of Sponsored Research (OSR) under Award No. OCRF-2014-CGR3-62140401 and the Visual Computing Center at KAUST. Minglei Li was partially supported by NSFC (61272327).

BibTex

@inproceedings{li2016boxfitting,
  title = {Manhattan-world Urban Reconstruction from Point Clouds},
  author = {Li, Minglei and Wonka, Peter and Nan, Liangliang},
  booktitle = {ECCV},
  year = {2016}
}